Summary Insight:

Enterprise AI fails without context. A centralized context engine and knowledge graph aren’t optional—they’re the fulcrum that turns AI from flashy demos into real business transformation.

Key Takeaways:

- Tools don’t scale AI—context does.

- Centralized context engine + knowledge graph = AI-native foundation.

- Without it, expect wasted cycles and stalled initiatives.

This article was originally published on Lex Sisney’s Enterprise AI Strategies Substack.

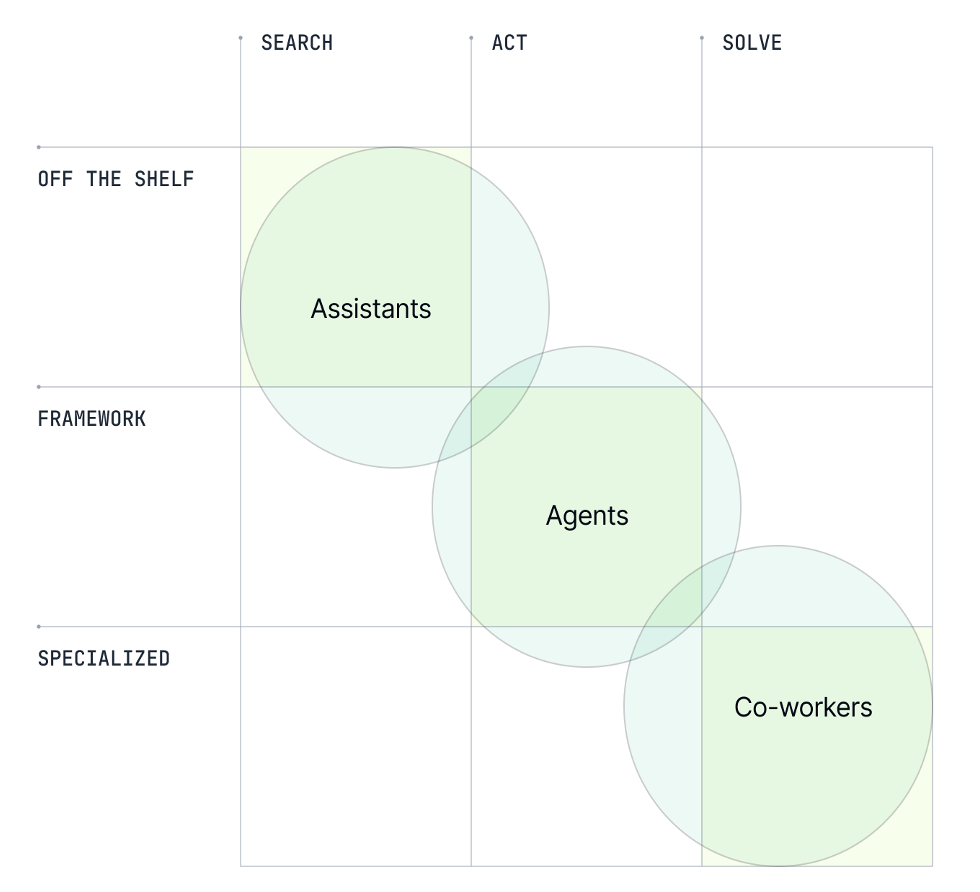

Earlier this month, PromptQL published a 3×3 matrix for evaluating GenAI projects. Their piece lays out the failures of enterprise AI to date and introduces a framework—what they call GAF—to cut through the noise and find success. It’s solid and worth reading before coming back here to see what they missed.

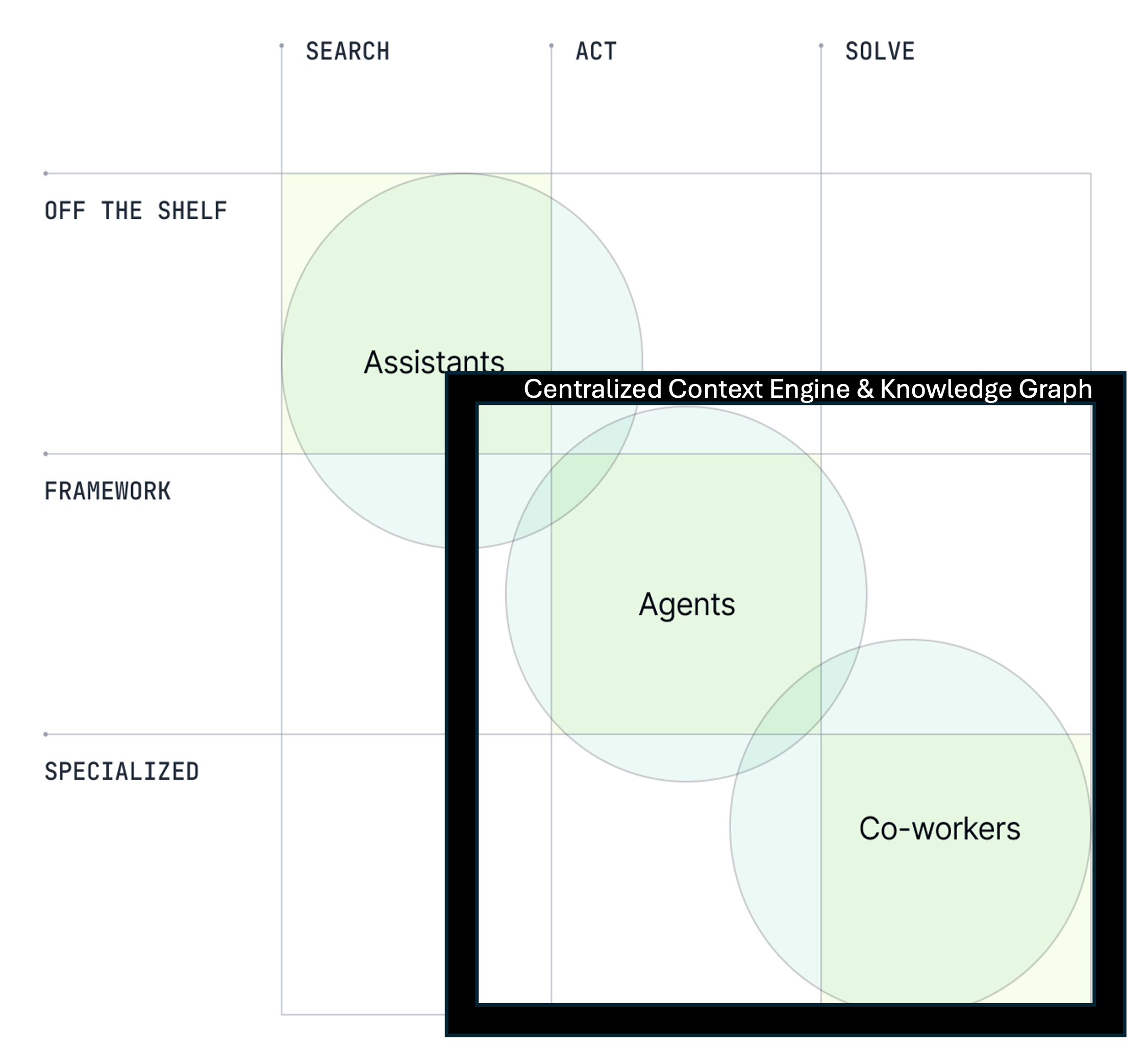

The GAF (Generative AI Framework) looks like this: the X-axis across the top shows the stage of AI—from Search → Act → Solve. The Y-axis on the left shows the type of solution—from Off-the-Shelf → Framework → Specialized. At each intersection, the authors provide a cheat sheet that maps what to look for: from AI Assistants to AI Agents to the evolution into AI Co-workers.

It’s neat and tidy, right? And hey—I’m a consultant. I’ve never met a matrix I didn’t like. A good matrix makes the complex simple, so credit to the authors for that.

But what’s missing?

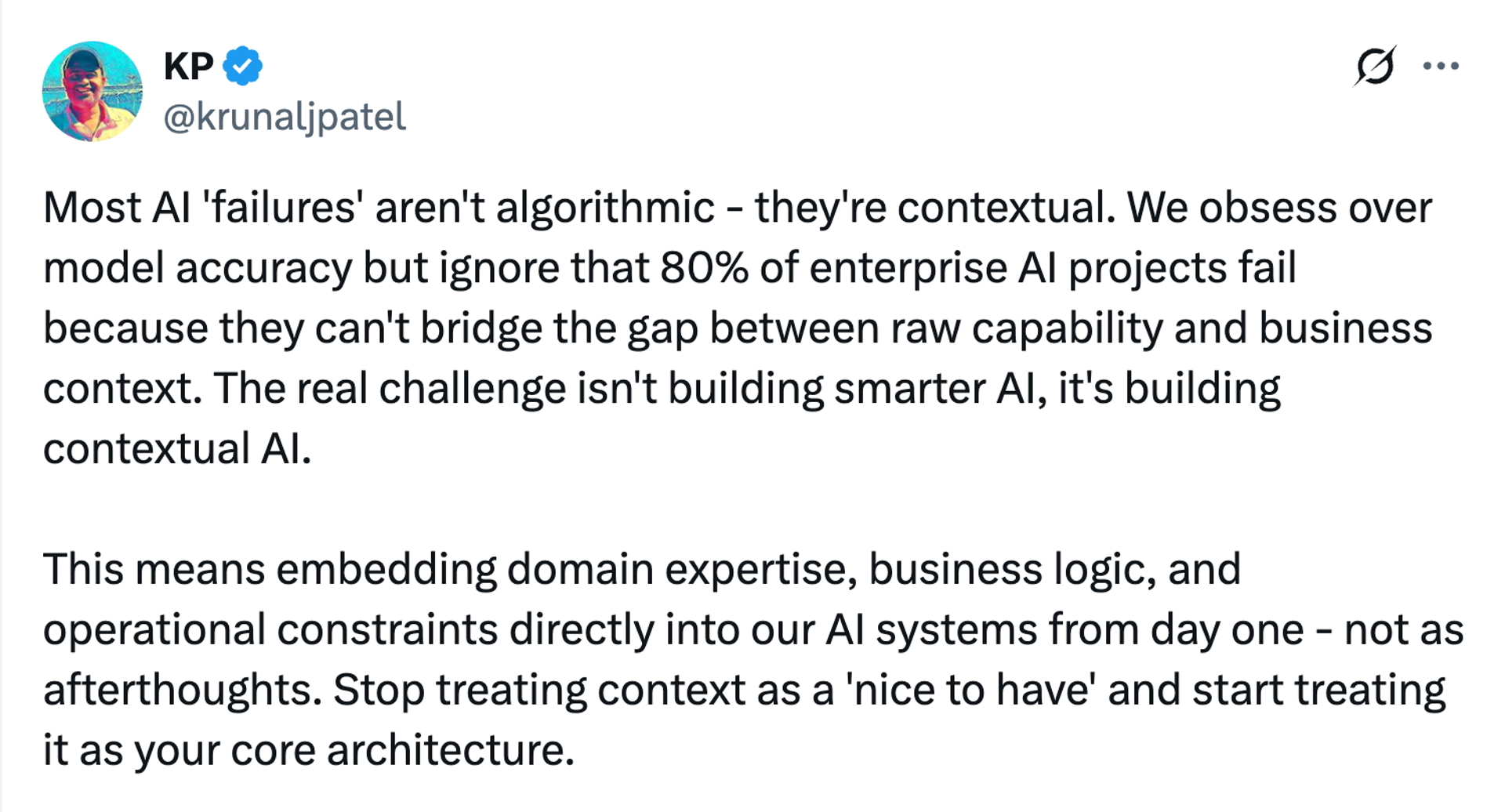

I had to chuckle reading their paper because they actually included an X post from @krunaljpatel that spells it out. Pay close attention to one phrase: “business context.”

I don’t know who KP is, but he nails it. And this is exactly what this Substack is about. With the right level of AI-enabled business context, your enterprise AI initiatives—beyond proof of concept—actually have a shot at transforming your company. Without it, you’ll be staring at this matrix in the near future, scratching your head, wondering what went wrong. Context is king.

So with that context (pun intended), I’d redraw the framework like this:

What am I trying to convey? That your company won’t make the leap from off-the-shelf AI assistants into the rapidly evolving world of team-based AI agents and co-workers unless you first build a foundation of centralized context. That’s the fulcrum of success or failure.

Let’s define some terms and then bring these definitions to life.

Context Engine: The process of transforming scattered inputs into centralized, structured knowledge.

Knowledge Graph: The linked memory representation that makes this knowledge navigable, queryable, and actionable.

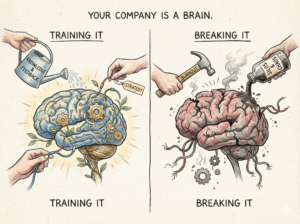

Think of AI as a Really Eager Employee

The other day, one of the smartest people I know asked me what I meant by “context.” That’s when it hit me: if they don’t get it, I’ve got a problem. No wonder it’s been so hard to spotlight this missing piece of the AI puzzle.

Picture hiring a new employee—fresh out of school, eager to contribute. But because you’re busy, they’re never properly onboarded. They don’t know the company’s history, mission, org chart, business priorities, or how decisions really get made. Then you hand them a task like “go fix the XYZ protocol.” With no context, their well-meaning actions only add friction and entropy.

Or take a consultant. They show up with a canned playbook and start issuing instructions. But without understanding your company’s history, goals, structure, and decision-making culture, how effective will they be? Not very. In fact, their cookie-cutter approach will likely cost you a lot of lost time and money.

That’s how to think about AI. Don’t view it as just technology—see it as an employee. Employees aren’t deterministic, and neither are LLMs. Both search for patterns and apply prior context to current tasks. Without context, neither can succeed.

The AI Superpower is Built from Persistent and Shared Context

Now that we’ve established why context is so critical, let’s bring the pieces together and apply this mentality to your own company’s AI transformation.

To have a centralized context engine and knowledge graph means you’ve built a robust system that works in three layers:

- The Context Engine – This is the process of transforming scattered data inputs into centralized, structured knowledge. Think of every data source in your company—and even what lives in the minds of employees. History, goals, org structure, people, work styles, operational cadence, plus the nonstop flow from emails, Slack, meetings, Zoom, CRM, market research, and more. All of it must be unified into a structured system that AI can access, query, and act on. While it might sound impossible, it’s increasingly feasible to build quickly.

- The Knowledge Graph – This is the linked memory that makes captured knowledge navigable, queryable, and actionable. It enables deep semantic retrieval and reasoning, where AI can answer not just “what happened?” but also “how is it connected, why does it matter, and what should we do next?”

- The AI Execution Layer – Running on top of these layers, this is the reasoning and execution layer that brings it all together in a way that is scalable and governable. It turns context and connections into coordinated action.
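To make the three layers a little more concrete, here is a minimal Python sketch. Everything in it is a hypothetical illustration: the `Record` shape, the `ContextEngine` and `KnowledgeGraph` classes, the `gather_context` function, and the sample data are assumptions made for this example, not FostrAI's implementation or any vendor's API. A real system would also need entity resolution, permissions, and far richer retrieval.

```python
from collections import defaultdict, deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    """One raw input from a hypothetical source (CRM, support desk, planning doc)."""
    source: str
    subject: str   # the entity the record is about
    relation: str  # how the subject relates to the target
    target: str


class KnowledgeGraph:
    """Linked memory: entities are nodes, labeled relations are edges."""

    def __init__(self):
        self.edges = defaultdict(list)  # node -> [(relation, neighbor, source)]

    def link(self, subject, relation, target, source):
        # Store both directions so the graph can be walked from either end.
        self.edges[subject].append((relation, target, source))
        self.edges[target].append((f"inverse:{relation}", subject, source))

    def connected(self, start, max_hops=2):
        """Breadth-first walk: map each reachable entity to its hop distance."""
        seen = {start: 0}
        queue = deque([start])
        while queue:
            node = queue.popleft()
            if seen[node] == max_hops:
                continue
            for _, neighbor, _ in self.edges.get(node, []):
                if neighbor not in seen:
                    seen[neighbor] = seen[node] + 1
                    queue.append(neighbor)
        del seen[start]
        return seen


class ContextEngine:
    """Turns scattered records into one structured graph."""

    def __init__(self, graph):
        self.graph = graph

    def ingest(self, records):
        for r in records:
            # Crude normalization so "Ticket 42" and "ticket 42" become one node.
            subject = r.subject.strip().lower()
            target = r.target.strip().lower()
            self.graph.link(subject, r.relation, target, r.source)


def gather_context(graph, entity, max_hops=3):
    """Execution-layer stand-in: collect the context an AI would be handed."""
    return sorted(graph.connected(entity, max_hops))


graph = KnowledgeGraph()
ContextEngine(graph).ingest([
    Record("crm", "Acme Corp", "raised", "Ticket 42"),
    Record("support", "ticket 42", "concerns", "export feature"),
    Record("planning", "Export Feature", "supports", "retention goal"),
])
print(gather_context(graph, "acme corp"))
```

The point of the sketch is the shape, not the details: three records from three different systems end up as one connected path from a customer to a company goal, which is the kind of "how is it connected?" question the knowledge graph is meant to answer.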

The best way to envision this near future is to look at real examples of companies already adopting this architecture.

How an AI-Native Company Operates

I’m an advisor to FostrAI, which is bringing this vision of running a company on a centralized context architecture to life. We’re now in beta launch with external clients—both start-ups and enterprises—but we’ve been operating this way inside our own company for several years. The difference between a legacy-era business and an AI-native one is striking. We’ve learned a lot along the way.

Let me share four recent examples that might sound familiar.

→ How to quickly onboard a new sales hire

→ How to align product and engineering teams

→ How to set up governance that actually works at scale

→ How to build continuous adaptability into the system

How to quickly onboard a new sales hire

Challenge: New hires in sales often struggle to ramp up quickly due to fragmented documentation, scattered training resources, and lack of real-time context on deals. Productivity can be delayed by months.

Legacy Approach: Companies rely on shadowing, tribal knowledge, and outdated wikis. New reps waste time piecing together insights and often make early mistakes with customers.

Solution: The centralized context engine delivers a curated knowledge base to every new hire—from deal histories and pricing strategies to objection-handling and competitive context. The system “onboards” them intelligently, surfacing just-in-time knowledge directly in their workflow.

Outcome: A sales hire who once took 90 days to contribute meaningfully is generating accurate proposals in their first week, supported by institutional memory in the context engine. Ramp-up time is cut dramatically, with improved confidence and lower onboarding costs.

User Experience: A new sales rep types into the AI execution layer: “Show me how we position against Competitor X and the three most common objections with our responses.” The centralized AI pulls answers from deal history, pricing sheets, and coaching notes, giving the rep instant battle-tested context on day one.

How to align product and engineering teams

Challenge: Misalignment between product management and engineering leads to wasted cycles, mis-prioritized features, and organizational friction.

Legacy Approach: PMs define priorities in documents or tickets, engineers push back in meetings, and both sides argue from partial context. Customer needs, technical constraints, and strategic goals remain disconnected, so trade-offs are hidden until they blow up into rework.

Solution: By embedding company goals, customer insights, technical dependencies, and resource constraints into the centralized context engine, both teams operate from the same system of record. When a new idea or feature is proposed, the enterprise system automatically surfaces relevant context and forces trade-offs into the open. Alignment happens continuously inside the shared graph, not retroactively in meetings.

Outcome: Instead of PMs dictating and engineers resisting, both roles co-create prioritization with the same evidence in front of them. Trade-offs are explicit, debates move from opinion to data, trust increases, and cycle time shortens. Product velocity accelerates with less friction and rework.

User Experience: A PM drafts a feature linked to a customer outcome. The context engine automatically pulls in related support tickets, churn data, and affected OKRs. Engineers add cost and complexity signals, which the system maps back to goals. Both sides see the same trade-off dashboard — “if we do this, we delay that.” The alignment is visible, persistent, and baked into execution before a line of code is written.

How to set up governance that actually works at scale

Challenge: Governance checks, especially in regulated sectors, can slow business down. Compliance teams struggle to keep up, leading to reactive reviews, missed risks, and delayed product launches.

Legacy Approach: Manual review of regulatory requirements, fragmented reporting, and bottlenecks with compliance officers. Governance happens after development, forcing costly corrections.

Solution: Governance and compliance rules are embedded directly into the context architecture. When new features are proposed, AI cross-references them against compliance requirements in real time, flagging risks and providing documentation instantly.

Outcome: Governance shifts from manual oversight to proactive enablement. Compliance becomes part of the creative process, allowing faster, safer launches and giving leadership real-time visibility into risk and oversight.

User Experience: When a new feature is proposed, the AI automatically attaches the relevant compliance checklist from the Governance SOP and flags any missing documentation. The CTO can open the Action and instantly see: “All regulatory checks passed, 1 item outstanding: update audit log.”
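As a rough illustration of what cross-referencing a proposal against compliance requirements could look like, here is a small Python sketch. The `Requirement` and `FeatureProposal` types, the tag names, and the requirement names are all hypothetical, invented for this example. Real governance rules are far richer than tag matching, so treat this as a sketch of the idea rather than a working compliance system.

```python
from dataclasses import dataclass, field


@dataclass
class Requirement:
    """A hypothetical compliance rule, triggered by tags on a proposal."""
    name: str
    applies_to: set


@dataclass
class FeatureProposal:
    title: str
    tags: set
    completed: set = field(default_factory=set)  # requirement names already done


def review(proposal, requirements):
    """Return (applicable requirements, names of those still outstanding)."""
    applicable = [r for r in requirements if r.applies_to & proposal.tags]
    outstanding = [r.name for r in applicable if r.name not in proposal.completed]
    return applicable, outstanding


def summarize(proposal, requirements):
    """One-line status of the kind a reviewer might see on the proposal."""
    applicable, outstanding = review(proposal, requirements)
    if not applicable:
        return "No compliance requirements apply."
    if not outstanding:
        return "All regulatory checks passed."
    passed = len(applicable) - len(outstanding)
    return (f"{passed} of {len(applicable)} checks passed, "
            f"{len(outstanding)} outstanding: {', '.join(outstanding)}")


requirements = [
    Requirement("privacy review", {"personal-data"}),
    Requirement("update audit log", {"personal-data", "payments"}),
    Requirement("export control review", {"encryption"}),
]
proposal = FeatureProposal(
    title="Customer data export",
    tags={"personal-data"},
    completed={"privacy review"},
)
print(summarize(proposal, requirements))
```

Because the check runs when the proposal is created rather than after development, the outstanding item shows up before any work starts, which is the shift from reactive review to proactive enablement described above.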

How to build continuous adaptability into the system

Challenge: Companies struggle to adapt fast when markets shift. Signals from customers, competitors, and partners often reach leadership late, and strategy updates get stuck in communication bottlenecks. Teams drift in different directions, wasting cycles and missing opportunities.

Legacy Approach: Leadership announces new priorities at the top, which trickle down slowly through decks, memos, and meetings. By the time execution catches up, the window has closed and teams are frustrated.

Solution: With centralized context, signals from the edge — sales calls, support tickets, customer experiments, competitive data — flow into the system in real time. Leadership doesn’t just broadcast updates; it curates and weights the most important signals. The context engine then cascades those priorities through goals, tasks, and workflows, so teams can see how their daily work connects to the new reality.

Outcome: Adaptation becomes continuous rather than episodic. Teams aren’t waiting for quarterly re-orgs or long strategy offsites; they’re adjusting course inside their day-to-day tools as the system itself learns. Alignment is faster, communication overhead drops, and the business stays nimble.

User Experience: A support team (or support agent!) tags a recurring customer pain point in the linked CRM. The knowledge graph connects it to rising churn in mid-market accounts and surfaces it to product and leadership. Leadership elevates the priority, and the update cascades back into engineering sprints, sales playbooks, and marketing campaigns. In near real time, every team sees not just the new focus but also the why: a direct line from customer signals to company action.
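The weighting-and-cascading flow can be sketched in a few lines of Python. The signal format, the per-source weights, the threshold, and the theme-to-team links below are all hypothetical values chosen for illustration. In practice the links would come from the knowledge graph and the weights from leadership's judgment.

```python
from collections import Counter


def surface_priorities(signals, source_weights, threshold):
    """Score each theme by its weighted signal count; keep those at or above threshold."""
    scores = Counter()
    for signal in signals:
        scores[signal["theme"]] += source_weights.get(signal["source"], 1.0)
    return [theme for theme, score in scores.most_common() if score >= threshold]


def cascade(priorities, team_links):
    """Map each elevated theme to the teams whose work it touches."""
    updates = {}
    for theme in priorities:
        for team in team_links.get(theme, []):
            updates.setdefault(team, []).append(theme)
    return updates


# Hypothetical signals arriving from the edge of the business.
signals = [
    {"source": "support", "theme": "slow export"},
    {"source": "support", "theme": "slow export"},
    {"source": "support", "theme": "slow export"},
    {"source": "sales", "theme": "pricing confusion"},
]
source_weights = {"support": 1.0, "sales": 2.0}  # leadership-assigned, assumed
team_links = {
    "slow export": ["engineering", "sales"],
    "pricing confusion": ["marketing"],
}

priorities = surface_priorities(signals, source_weights, threshold=3.0)
updates = cascade(priorities, team_links)
print(priorities)
print(updates)
```

Here three support tags push "slow export" over the threshold while a single sales mention of pricing does not, so only the first theme cascades to engineering and sales. Each team's update carries the theme that triggered it, which is the "why" that travels with the new priority.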

Summary

The core takeaway is this: to truly become an AI-native business, you need a centralized context engine and a knowledge graph built on your proprietary information. That’s the critical mental shift. Strangely, most enterprise AI initiatives still miss this.

With that structure in place, you’ll move faster, learn faster, and keep improving over time. Without it, your AI initiatives won’t scale — and competitors who solve this first will pull ahead.

There’s much more to unpack in future articles. Let me know which areas you’d like me to dive into next. Thanks for reading.