Summary Insight:

Cheap intelligence is everywhere. What separates winners from losers isn’t the tools on the shelf—it’s the architecture inside your business.

Key Takeaways:

- AI architecture—not tools—determines leverage vs. chaos.

- Match your stack design to your company’s lifecycle stage.

- The Semantic Layer—context engine + knowledge graph—is the true accelerator.

This article was originally published on Lex Sisney’s Enterprise AI Strategies Substack.

In the rush to adopt enterprise AI, it’s easy to chase tools, vendors, or headlines. The smarter move is to pause and ask: what kind of architecture will actually let my business thrive in the AI era?

That’s what this article is about. I’ll give you a metaphor and a mental model for enterprise AI architecture—because how we think shapes how we act. And if you want AI to create leverage rather than chaos, you need the right mental model guiding your build.

Enterprise AI architecture is the system that connects your company’s data, models, and workflows. Done right, it compounds intelligence and creates durable strategic advantage. Done wrong, it becomes another source of entropy. Your architecture must align with strategy, fit your lifecycle stage, and match your current level of AI adoption. Think of it as a living structure that evolves with your business.

Now, let’s go shopping…

The AI Marketplace

Picture two men in a grocery store aisle.

The first is a health and fitness coach who looks the part. Decades of research, discipline, and practice give him the knowledge and context to make smart choices. As he moves through the aisles, he knows what to pick up and what to avoid because his mental model is clear: he wants strength, vitality, and long-term health.

The second man eats whatever is cheap, salty, sweet, or fatty. He tells himself he’ll focus on health, but his habits betray him. He’s overweight, sluggish, and easily swayed by marketing. Without a strong framework or conviction, his choices are driven by impulse and packaging.

Both men face the same shelves. But over time, their results couldn’t be more different. Put them in a foot race and it’s no contest.

Now scale that metaphor up. The grocery store is the $2T—and rapidly growing—AI market. The men are two competing businesses. Both have access to cheap, abundant “packages” of superintelligence. The shelves are overflowing, and costs are dropping.

The “junk food” business is overwhelmed. They sample tools, encourage teams to experiment, but lack the framework to guide smart, strategic adoption. Their choices are reactive.

The “fitness” business navigates with discipline. They test tools too, but against a clear model of what their architecture must become. They know what to say yes to—and more importantly, what to say no to.

Both companies are already in a foot race. But in a world of near-infinite, compounding intelligence, the one with the stronger understanding will radically outperform the other.

That’s the metaphor. Now let’s break it down so you can craft your own mental model for AI architecture—one that keeps you focused, makes your choices smarter, and helps you win.

What’s on the Super Intelligence Shelves?

If you walk the aisles of today’s AI marketplace, here’s what you’ll find:

Aisle 1: Foundation Ingredients – LLMs and Base Models

The staples. Flour, eggs, rice. These are the core engines of intelligence, large language models that come in two forms:

- Closed-source (ChatGPT, Gemini, Claude, Grok): proprietary frontier models, powered by the largest AI factory build-out in history.

- Open-source (Llama 3, Mistral, DeepSeek): flexible, cheap, and hackable, but they demand more work and infrastructure on your part.

These ingredients are abundant. The shelves are overflowing.

Aisle 2: Connectors and Translators – Integration Tools

Adapters, mixers, blenders. This aisle is about plumbing—moving data between systems and models.

- Quick hooks: Zapier, Workato, Power Automate.

- Enterprise-grade bridges: TrueFoundry, Vellum, Tray.

They don’t add flavor themselves, but without them, nothing combines.

Aisle 3: AI-Enhanced Staples – Legacy Tools with AI Add-Ons

The brand names you already know, now fortified with AI.

- Productivity suites: Microsoft 365/Copilot, Google Workspace/Gemini, Canva.

- Enterprise platforms: Salesforce, SAP Joule, Adobe Firefly.

For companies tied to legacy vendors, these are the easiest upgrades—but they keep you inside someone else’s recipe box.

Aisle 4: AI-Native Delicacies – Purpose-Built AI Apps

The gourmet aisle. Tools born AI-first, designed to dazzle.

- Creative/media: Runway, Midjourney, Synthesia.

- Specialized apps: Harvey (legal), Lovable (apps), Lindy (agents).

They shine in modern architectures but can be tough to integrate into older kitchens.

We can’t predict the future, but one thing is clear: every business now has access to dirt-cheap, recursive superintelligence that keeps getting exponentially better. It’s all mind-blowing and hard to keep up with.

But here’s the catch—none of this abundance matters if your company doesn’t have the right internal AI architecture. Without it, you’ll never capture the leverage—and you’ll risk being commoditized out of existence.

So let’s turn from the shelves outside your business to the architecture inside it.

What Belongs in Your Internal Enterprise AI Architecture (your kitchen)

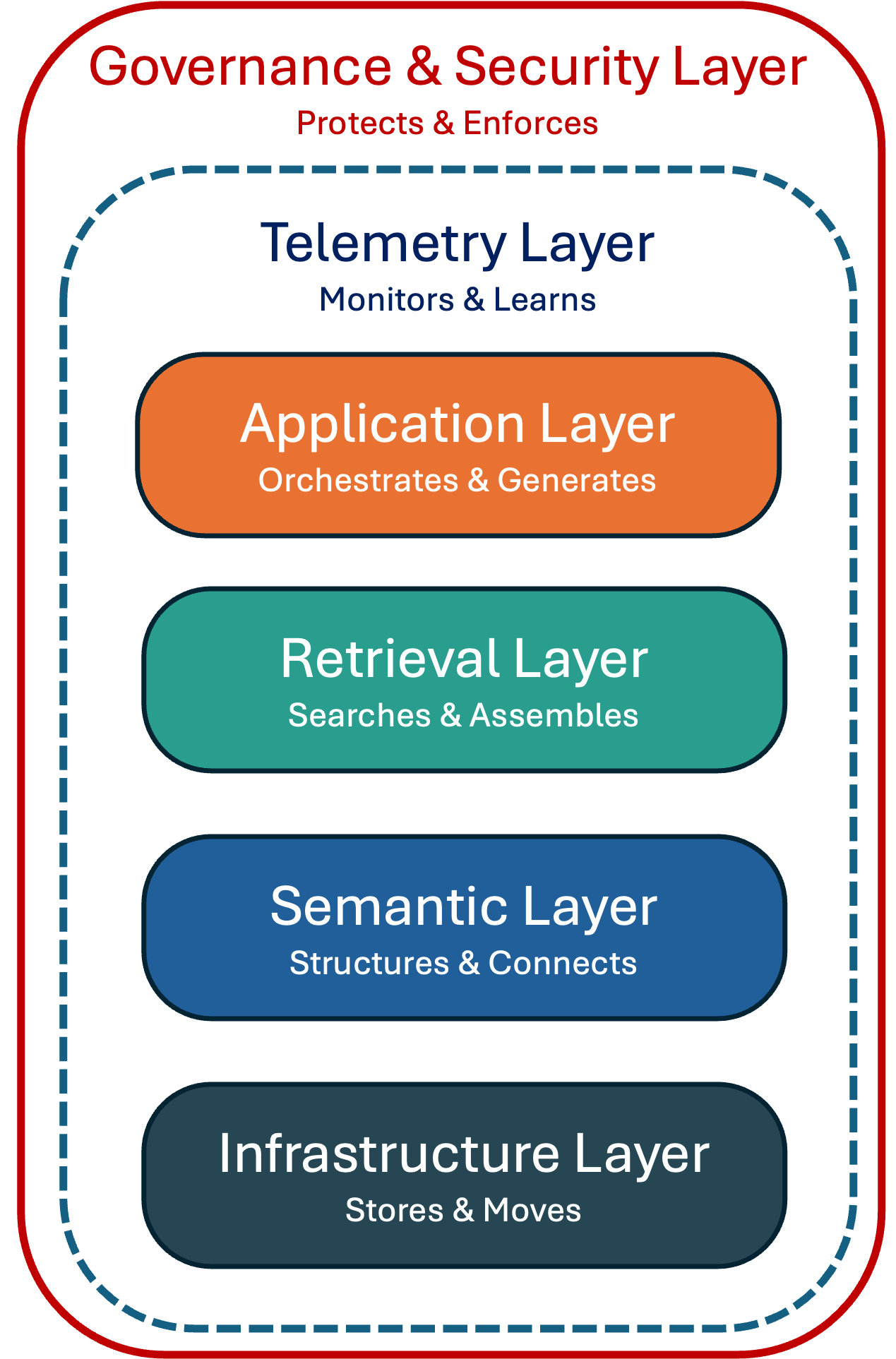

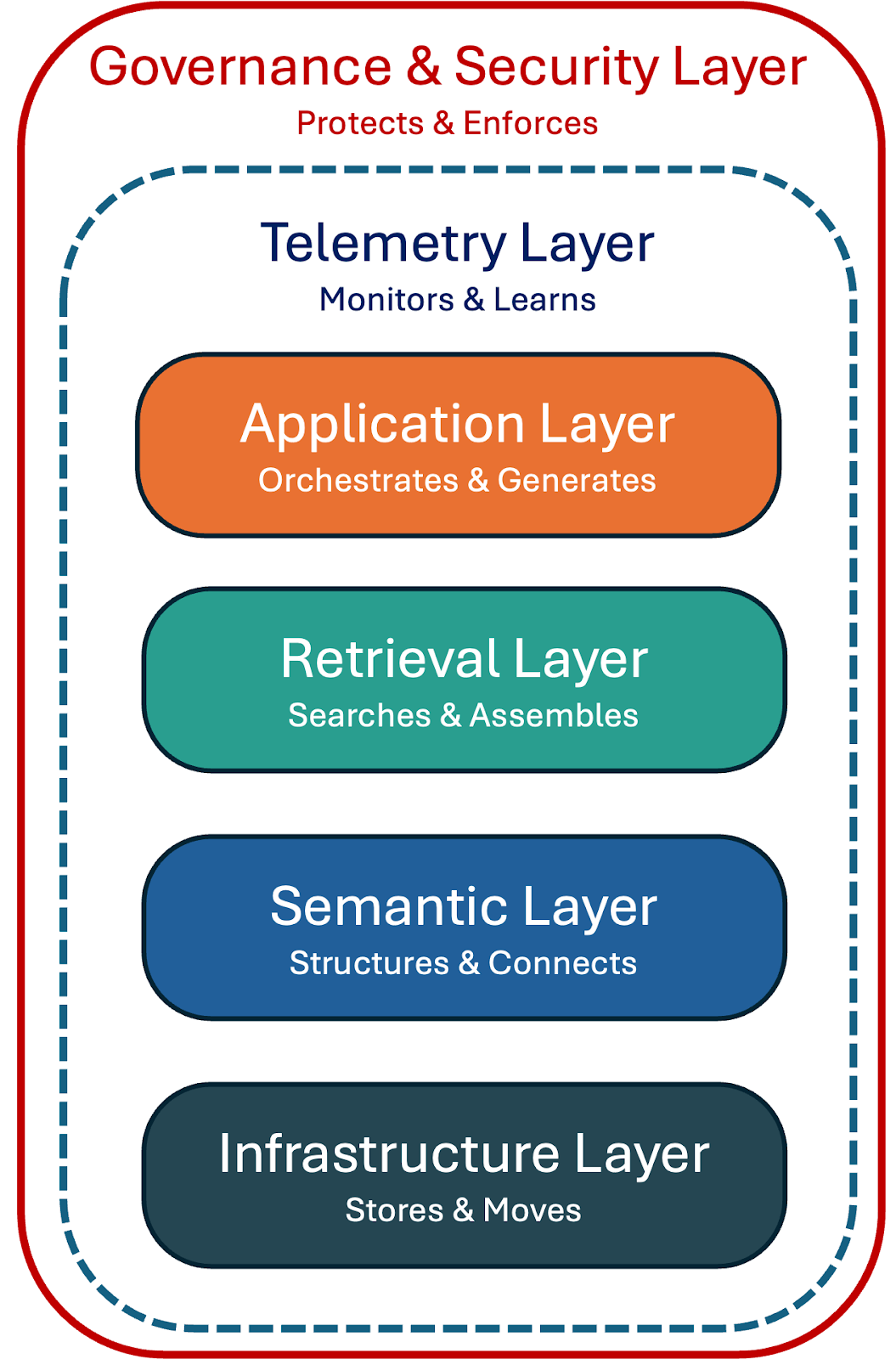

The picture above shows what belongs in your internal enterprise AI architecture.

Don’t think of your enterprise AI architecture as a one-and-done project. It really should be thought of as a living, adaptive system.

Application Layer: Orchestrates & Generates →

Where users and agents interact with the system. Handles which model gets called, how outputs are generated, and how they’re delivered back into workflows.

Retrieval Layer: Searches & Assembles →

The broker between apps and data. Manages search across indexes, enforces access controls, and assembles the “context pack” (inputs/outputs) that models consume.

Semantic Layer: Structures & Connects →

Transforms raw data into structured, connected knowledge. Provides the system with memory, relationships, and persistent context.

Infrastructure Layer: Stores & Moves →

The foundation. Cleans, stores, and moves enterprise data at scale. Ensures freshness, reliability, and performance for everything above it.

Telemetry Layer: Monitors & Learns →

Continuous monitoring of performance, quality, safety, and cost across all layers, with feedback loops to improve outcomes and prevent drift.

Governance & Security Layer: Protects & Enforces →

Policies, safeguards, and controls that surround the entire stack, ensuring compliance, access control, safety, and ethical use of AI across the enterprise.

(loops back to) the Application Layer →

If you’ve been following my work, where would you place the highest leverage points in designing the enterprise AI stack for your business?

All the layers matter. But from my vantage point, the Semantic Layer—where your centralized context engine and knowledge graph live—is the true accelerator. Get this right, and you’ll move faster and smarter than the competition.

In the next section, I’ll break down each layer and share what to look for as you build out your own enterprise AI stack.

Application Layer: Orchestrates & Generates

This is the layer you see and touch. It orchestrates which model to use, generates content, and delivers outputs back into workflows. Think of it as the storefront in our grocery metaphor—where shoppers actually make their selections. But just like a storefront, it only works if it’s stocked and connected to the right supply chain behind the scenes. That’s where the next layers come in.

What to look for:

- Flexibility, not lock-in. Design the layer to route to the best model for the task at the best cost, rather than tying the company to a single vendor’s roadmap.

- Multi-modal leverage. Don’t just assume multimodal is covered because the models support it. The question is whether your applications and workflows can actually ingest and make use of text, images, code, audio, and video together.

- Human + machine co-working. Today’s tools augment human knowledge workers rather than replace them. Treat “3× productivity per person” as the floor, while retaining human judgment.

- Resilience. Build redundancy so that if one model/API goes down or prices spike, your applications don’t grind to a halt.
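For readers who want to see the routing and resilience ideas above in concrete form, here is a minimal sketch in Python. It is an illustration under stated assumptions, not a reference implementation: the model names, the cost figures, and the `route` function are all hypothetical, and the two stand-in functions take the place of real vendor clients.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ModelOption:
    name: str                    # hypothetical label, not a real product
    cost_per_call: float         # illustrative cost units, not real pricing
    supports: set[str]           # task types this option can handle
    call: Callable[[str], str]   # stand-in for a real vendor client


def route(task_type: str, prompt: str, options: list[ModelOption]) -> tuple[str, str]:
    """Try the cheapest capable option first; fall back to the next on failure."""
    capable = sorted(
        (o for o in options if task_type in o.supports),
        key=lambda o: o.cost_per_call,
    )
    if not capable:
        raise ValueError(f"no model supports task type {task_type!r}")
    errors = []
    for option in capable:
        try:
            return option.name, option.call(prompt)
        except Exception as exc:  # e.g. an outage or rate limit at one provider
            errors.append(f"{option.name}: {exc}")
    raise RuntimeError("all models failed: " + "; ".join(errors))


def _flaky(prompt: str) -> str:
    # Simulates a provider that is currently down.
    raise TimeoutError("provider unavailable")


def _steady(prompt: str) -> str:
    return f"summary of: {prompt}"


OPTIONS = [
    ModelOption("small-model", 0.1, {"summarize"}, _flaky),
    ModelOption("large-model", 1.0, {"summarize", "code"}, _steady),
]

used, output = route("summarize", "Q3 board notes", OPTIONS)
print(used, "->", output)
```

In this toy run the cheaper option fails, so the request falls through to the more expensive one and the application keeps working. A production router would likely also weigh quality and latency, which this sketch leaves out.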

Retrieval Layer: Searches & Assembles

Behind the storefront is the broker—the retrieval layer. When a query comes in, this layer searches across indexes and data sources, routes the request to the right places, and assembles a clean “context pack” that the model can consume. Without it, your applications are guessing; with it, they’re grounded in real knowledge, filtered by access controls, and stitched into coherent answers.

What to look for:

- Grounding in your own data. Ensure retrieval is tied to enterprise knowledge and clean market insights, not just the open web.

- Hybrid search. Invest in search systems that bring multiple lenses together:

- Keyword search → the classic “exact word match” approach (like Google in its early days). Fast, but brittle—misses nuance.

- Semantic / vector search → instead of exact words, it compares meaning. It uses embeddings (numerical representations of concepts) to surface results that are similar in context, even if the wording is different.

- Graph traversal → maps relationships between entities (people, processes, data, documents) and follows those links to bring back connected insights you wouldn’t see from text alone.

- Context assembly. Look for solutions that don’t just retrieve chunks but package them into coherent, governed “context packs.”

- Policy-aware retrieval. Access controls and compliance should be enforced at retrieval time, not bolted on afterward.
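To make the retrieval ideas above less abstract, here is a minimal sketch in Python of hybrid search feeding a governed context pack. Everything in it is an assumption for illustration: the `Doc` fields, the hand-written two-number "embeddings," the role names, and the choice of reciprocal rank fusion as the way to merge keyword and vector rankings. Graph traversal, the third lens, is omitted to keep the example short.

```python
import math
from dataclasses import dataclass


@dataclass
class Doc:
    doc_id: str
    text: str
    embedding: list[float]    # toy vectors; a real system would use an embedding model
    allowed_roles: set[str]   # which roles may see this document


def keyword_rank(query: str, docs: list[Doc]) -> list[str]:
    """Rank docs by how many query words appear verbatim in the text."""
    words = set(query.lower().split())
    scored = [(len(words & set(d.text.lower().split())), d.doc_id) for d in docs]
    return [doc_id for score, doc_id in sorted(scored, key=lambda s: -s[0]) if score > 0]


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def vector_rank(query_vec: list[float], docs: list[Doc]) -> list[str]:
    """Rank docs by similarity of meaning (cosine similarity of embeddings)."""
    return [d.doc_id for d in sorted(docs, key=lambda d: -cosine(query_vec, d.embedding))]


def fuse(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Reciprocal rank fusion: reward documents that rank well in any list."""
    scores: dict[str, float] = {}
    for ranking in rankings:
        for position, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + position)
    return sorted(scores, key=lambda doc_id: -scores[doc_id])


def build_context_pack(query, query_vec, docs, role, limit=2):
    # Access control is applied before ranking, so restricted text never
    # reaches the model, rather than being filtered out afterward.
    visible = [d for d in docs if role in d.allowed_roles]
    order = fuse([keyword_rank(query, visible), vector_rank(query_vec, visible)])
    by_id = {d.doc_id: d for d in visible}
    sources = [{"id": i, "text": by_id[i].text} for i in order[:limit]]
    return {"query": query, "role": role, "sources": sources}


DOCS = [
    Doc("pricing", "enterprise pricing tiers and discounts", [0.9, 0.1], {"sales", "finance"}),
    Doc("payroll", "payroll pricing adjustments for staff", [0.8, 0.3], {"finance"}),
    Doc("onboarding", "customer onboarding checklist", [0.1, 0.9], {"sales", "support"}),
]

pack = build_context_pack("pricing discounts", [1.0, 0.0], DOCS, role="sales")
print([s["id"] for s in pack["sources"]])
```

In this toy data set, a user with the sales role never receives the payroll document, even though it mentions pricing. The context pack is just a dictionary here; the point is that it carries the query, the role, and the governed sources together.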

Semantic Layer: Structures & Connects

Of course, retrieval is only as good as the meaning it pulls from. That’s the job of the semantic layer. Here’s where your centralized context engine and knowledge graph live, transforming raw data into structured relationships. This is the memory and reasoning layer of your enterprise AI stack—the place where scattered signals become connected understanding. With a strong semantic layer, your business doesn’t just answer questions; it thinks in systems.

What to look for:

- A centralized context engine. This is where leverage lives—Fostr’s (FostrAI.com) approach of building a persistent knowledge graph on top of enterprise data is the right blueprint.

- Relationship mapping. Knowledge must be more than nodes; it should capture dependencies, forces, and flows to show how and why things influence each other.

- Versioning and governance. Ensure context is persistent so the system learns rather than re-learns.

- Integration across silos. If each department builds its own graph, entropy wins. You need one unified semantic layer feeding the enterprise brain.
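As a rough illustration of relationship mapping, here is a minimal Python sketch of a knowledge graph whose edges carry a named relationship, plus a traversal that explains how one entity influences another. The `KnowledgeGraph` class, the relation names, and the sample entities are all hypothetical; a real semantic layer would sit on a graph database and add versioning and governance.

```python
from collections import defaultdict, deque


class KnowledgeGraph:
    """Toy in-memory graph: each edge records what kind of relationship it is."""

    def __init__(self):
        self._edges = defaultdict(list)  # node -> [(relation, target), ...]

    def relate(self, source: str, relation: str, target: str) -> None:
        self._edges[source].append((relation, target))

    def downstream(self, start: str, max_hops: int = 3):
        """Breadth-first walk: everything `start` influences, with the path taken."""
        seen = {start}
        queue = deque([(start, [])])
        found = []
        while queue:
            node, path = queue.popleft()
            if len(path) == max_hops:
                continue  # do not expand beyond the hop limit
            for relation, target in self._edges.get(node, []):
                if target in seen:
                    continue
                seen.add(target)
                step = path + [f"{node} -[{relation}]-> {target}"]
                found.append((target, step))
                queue.append((target, step))
        return found


kg = KnowledgeGraph()
kg.relate("Supplier A", "supplies", "Component X")
kg.relate("Component X", "used_in", "Product Alpha")
kg.relate("Product Alpha", "drives", "Q4 revenue")
kg.relate("Sales team", "sells", "Product Alpha")

for entity, path in kg.downstream("Supplier A"):
    print(entity, "|", " ; ".join(path))
```

The useful part is the path, not just the destination: the traversal shows why a supplier problem would reach revenue, which keyword or vector search alone would not reveal.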

Infrastructure Layer: Stores & Moves

Beneath it all is the foundation: your data warehouse, pipelines, and storage. This is the machinery that cleans, moves, and organizes enterprise data at scale. If it’s brittle, the layers above will falter. If it’s strong, the entire stack runs smoothly. Data infrastructure doesn’t get much glory, but it’s the bedrock that makes everything else possible.

What to look for:

- AI-optimized plumbing. Pipelines and warehouses should be designed for embeddings, vector search, and high-throughput inference workloads, not just analytics.

- Freshness and reliability. Out-of-date or corrupted data flows create garbage-in/garbage-out.

- Elastic scale. Infrastructure must flex with unpredictable AI demand without breaking budgets.

- Observability baked in. Performance and lineage tracking at the infrastructure level reduces entropy upstream.
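One small, concrete version of the freshness point above is a check that flags data sets that have gone stale before they feed anything upstream. This is a minimal Python sketch; the data set names and the freshness budgets in `MAX_AGE` are hypothetical and would come from your own pipelines.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical freshness budgets: how old each data set may be before it counts as stale.
MAX_AGE = {
    "crm_accounts": timedelta(hours=24),
    "support_tickets": timedelta(hours=1),
}


def stale_datasets(last_loaded: dict, now: datetime, max_age: dict = MAX_AGE) -> list[str]:
    """Return data sets that were never loaded or exceed their freshness budget."""
    return sorted(
        name
        for name, budget in max_age.items()
        if name not in last_loaded or now - last_loaded[name] > budget
    )


now = datetime(2025, 1, 2, 12, 0, tzinfo=timezone.utc)
last_loaded = {
    "crm_accounts": now - timedelta(hours=2),
    "support_tickets": now - timedelta(hours=3),
}
print(stale_datasets(last_loaded, now))
```

Here the CRM load is two hours old against a 24-hour budget, so it passes, while the ticket feed is three hours old against a one-hour budget and gets flagged.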

Telemetry Layer: Monitors & Learns

Now, a stack without feedback is a black box. The telemetry layer closes the loop by monitoring performance, quality, safety, and cost. It shows you how the system is actually working—where retrievals are missing, models are drifting, or costs are creeping up. With telemetry, you can tune, prove, and defend the value of your AI systems. Without it, you’re flying blind.

What to look for:

- System-wide observability. Measure performance, cost, and drift across the entire stack.

- Feedback loops. Don’t just collect logs—instrument the system so it learns. Track retrieval quality, hallucination rates, and real user adoption signals. Then feed that data back into how models are prompted, how retrieval is tuned, and how workflows are designed. Without this loop, performance drifts. With it, the system compounds intelligence over time.

- FinOps discipline. AI costs can explode without monitoring. Track spend at the query/workload level.

- Trust metrics. CEOs need evidence: accuracy, compliance, ROI. Telemetry should prove the system is working and where it can improve.
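To show what tracking spend and quality at the query/workload level might look like, here is a minimal Python sketch. The `QueryEvent` fields, the workload names, and the dollar figures are hypothetical. The "ungrounded rate" is a simple stand-in signal (answers produced with no retrieved sources), not a real hallucination measure.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class QueryEvent:
    workload: str            # e.g. a business workflow; names here are made up
    cost_usd: float          # illustrative cost of this single query
    retrieved_sources: int   # how many sources the retrieval layer supplied
    user_accepted: bool      # adoption signal: did the user keep the answer?


def summarize(events: list[QueryEvent]) -> dict:
    """Roll individual query events up into per-workload cost and quality figures."""
    totals = defaultdict(lambda: {"queries": 0, "cost_usd": 0.0, "ungrounded": 0, "accepted": 0})
    for e in events:
        row = totals[e.workload]
        row["queries"] += 1
        row["cost_usd"] += e.cost_usd
        row["ungrounded"] += int(e.retrieved_sources == 0)
        row["accepted"] += int(e.user_accepted)
    report = {}
    for workload, row in totals.items():
        n = row["queries"]
        report[workload] = {
            "queries": n,
            "cost_usd": round(row["cost_usd"], 4),
            "ungrounded_rate": row["ungrounded"] / n,
            "acceptance_rate": row["accepted"] / n,
        }
    return report


EVENTS = [
    QueryEvent("support", 0.002, 3, True),
    QueryEvent("support", 0.004, 0, False),
    QueryEvent("contracts", 0.05, 5, True),
]

report = summarize(EVENTS)
for workload, stats in report.items():
    print(workload, stats)
```

The feedback loop comes from acting on the report: a workload with a high ungrounded rate points at retrieval tuning, while a costly workload with low acceptance is a candidate for a cheaper model or a redesigned workflow.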

Governance & Security Layer: Protects & Enforces

And finally, surrounding everything is governance and security. This layer ensures access controls, compliance, and ethical use. It’s the shield that prevents misuse and the trust anchor that lets leaders scale confidently. Without governance, your company is set up for systemic risk.

What to look for:

- Policy as code. Governance must be enforced in real-time, not just written in a manual.

- Contextual access control. Who can see what, under what conditions, should be baked into the architecture itself and must be instantly adaptable as business needs change.

- Regulatory readiness. Anticipate audits, data residency, and sector-specific compliance (HIPAA, GDPR, SOX).

- Ethical safeguards. Prevent misuse, bias amplification, and privacy breaches. Without trust, adoption collapses.

- Surrounds everything. Governance isn’t a department; it’s a layer that encompasses the entire stack, just like the Telemetry layer above.
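Here is a minimal Python sketch of what “policy as code” with contextual access control could look like. The rules, roles, tags, and regions are hypothetical examples, and the deny-by-default design is my assumption rather than a requirement of any particular regulation. Dedicated policy engines exist for this job; the sketch only shows the principle that rules are data evaluated deterministically at request time.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    role: str           # who is asking
    resource_tag: str   # what kind of data they want
    region: str         # a condition, such as where the request originates


# Rules live as plain data, so they can be reviewed, versioned, and changed
# without touching application code. None means "no restriction".
POLICIES = [
    {"resource_tag": "phi", "roles": {"clinician"}, "regions": {"us"}},
    {"resource_tag": "financials", "roles": {"finance", "executive"}, "regions": {"us", "eu"}},
    {"resource_tag": "public", "roles": None, "regions": None},
]


def is_allowed(req: Request, policies: list = POLICIES) -> bool:
    """Deny by default; allow only when a rule for this tag matches role and region."""
    for rule in policies:
        if rule["resource_tag"] != req.resource_tag:
            continue
        role_ok = rule["roles"] is None or req.role in rule["roles"]
        region_ok = rule["regions"] is None or req.region in rule["regions"]
        if role_ok and region_ok:
            return True
    return False


print(is_allowed(Request("clinician", "phi", "us")))
print(is_allowed(Request("clinician", "phi", "eu")))
```

The same clinician is allowed in one region and refused in another, and any data tag without a rule is refused outright. Adapting to a new business need means editing the rule list, not the code.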

How to Attack the Enterprise AI Stack (Lifecycle-Aligned Approach)

Just as every product and business moves through distinct lifecycle stages—Pilot It, Nail It, and Scale It—your enterprise AI architecture must evolve with your organization’s stage and level of AI adoption. Attempting to scale before nailing use cases, or building proprietary systems before de-risking experiments, is a recipe for failure and misalignment.

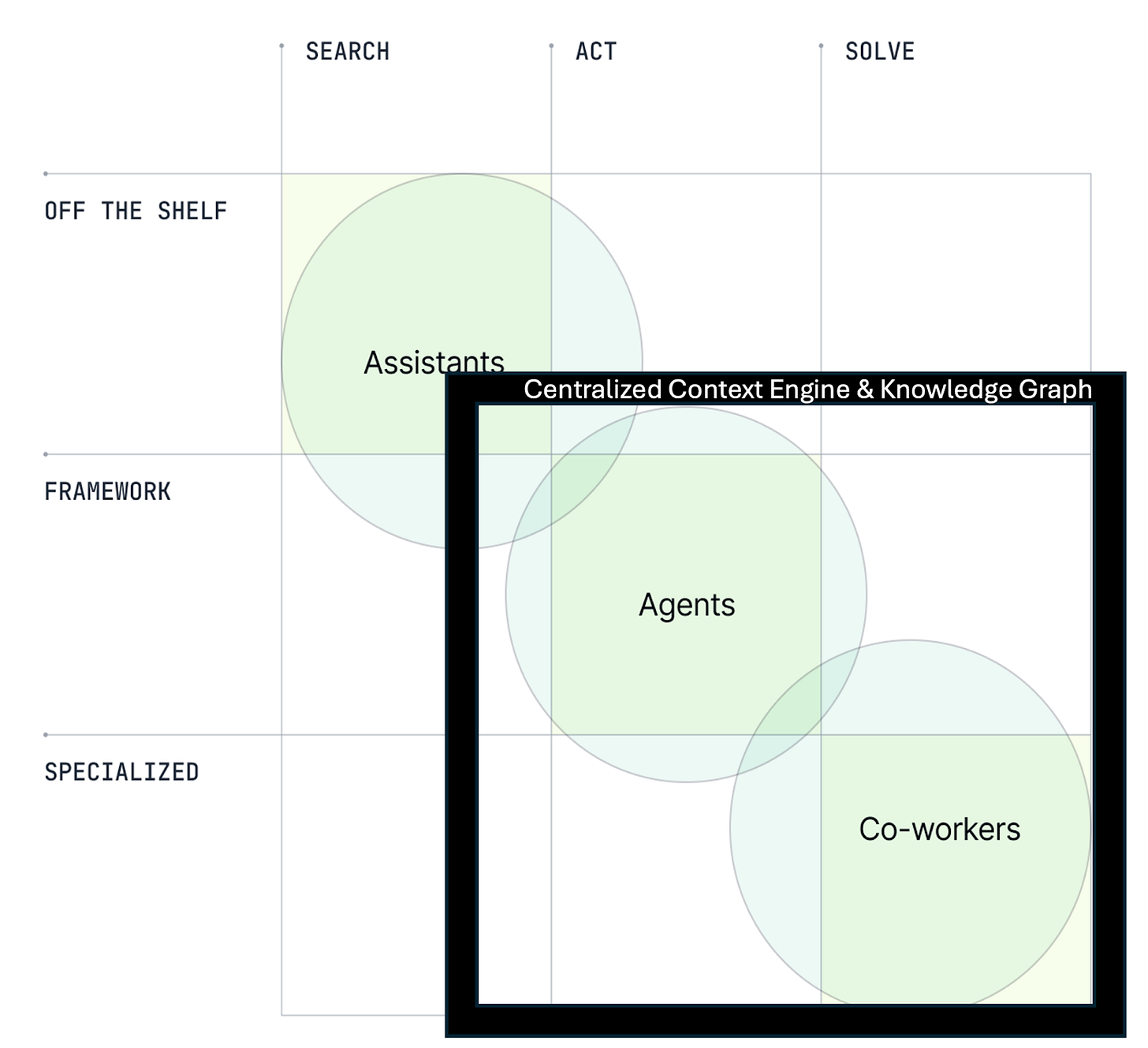

The diagram from The Viral 3×3 AI Matrix—and the Missing Piece helps visualize this journey. As you move from Assistants → Agents → Co-workers, different parts of the stack must come alive.

Stage 1: Pilot It — Assistants (Experiment with Off-the-Shelf, Learn)

At this stage, your company is in the upper left of the matrix. The goal is to run fast, low-risk experiments and build team understanding—not build the whole system. You’re leaning on Assistants (off-the-shelf models and copilots) while starting to lay the groundwork.

- Application Layer: Comes alive first. Experiment with off-the-shelf copilots (ChatGPT, Claude, Gemini) to boost productivity and test use cases.

- Infrastructure Layer: Get basic plumbing in place—data pipelines, storage, and cloud access—so experiments have a foundation.

- Telemetry Layer (lightweight): Start instrumenting usage logs, baselines, and simple feedback loops.

🔧 What to do:

- Mix AI copilots into workflows with low-code tools.

- Form an internal “AI Pilot Team” with a single-threaded owner to run focused pilots.

- Track entropy signals: What breaks? What repeats?

🎯 Your goal: Prove that AI can improve decision speed, reduce friction, or enhance productivity.

Stage 2: Nail It — Agents (Build Your Semantic Core)

Once experiments show promise, it’s time to codify the learning and move toward scalable patterns. This is where Agents come in: systems that don’t just answer but act—grounded in your company’s context.

- Semantic Layer: Comes alive. Build your centralized context engine and knowledge graph to create a persistent, structured memory of the business.

- Retrieval Layer: Operationalize enterprise search, access controls, and context assembly so models are grounded in your proprietary data.

- Telemetry Layer (full): Move from basic logs to robust monitoring of performance, hallucination rates, and adoption.

- Governance & Security Layer (early): Begin embedding access control and policy guardrails as you codify processes.

🔧 What to do:

- Stand up your semantic graph + retrieval stack.

- Pick AI-native apps that integrate cleanly into your evolving architecture.

- Use orchestration frameworks to manage workflows.

- Define decision boundaries: What can AI automate? What stays human?

🎯 Your goal: Convert scattered pilots into a coherent operating system—anchored in your business model, not just vendor tools.

Stage 3: Scale It — Co-workers (Systematize and Govern)

Now AI is no longer just an assistant or an agent. It’s operating as a Co-worker—a durable part of your workflows and decision-making. At this stage, the full architecture must be alive, resilient, and governed.

- Governance & Security Layer: Fully operational. Policies-as-code, compliance, and ethical use must surround the system.

- Telemetry Layer: Single-source-of-truth. Continuous monitoring of quality, performance, safety, and costs feeds back into optimization.

- All Layers: Application, Retrieval, Semantic, Infrastructure—working in concert as a living, adaptive system.

🔧 What to do:

- Implement robust FinOps and telemetry down to the query/workload.

- Codify governance across ethics, compliance, and access.

- Optimize model routing and retrieval for both performance and cost.

- Run cross-functional AI Scorecards to prioritize system improvements.

🎯 Your goal: Transform your AI stack into a durable competitive advantage—an adaptive system that compounds leverage and withstands regulatory scrutiny.

By aligning your AI architecture with your company’s lifecycle stage, you prevent premature scaling, focus energy on high-leverage moves, and synchronize execution with strategy. As Organizational Physics teaches: structure must follow stage—and with AI, that principle matters more than ever.
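The stage-by-stage plan above can also be written down as a small lookup table, which makes it easy to check whether a proposed build is premature. This Python sketch transcribes the three stages from this article. The short layer keys, the maturity labels, and the `is_premature` helper are my own shorthand for illustration.

```python
STAGES = ["pilot", "nail", "scale"]

# What each stage switches on or upgrades, transcribed from the three stages above.
STAGE_PLAN = {
    "pilot": {
        "application": "experiment",
        "infrastructure": "basic",
        "telemetry": "lightweight",
    },
    "nail": {
        "semantic": "build",
        "retrieval": "operational",
        "telemetry": "full",
        "governance": "early",
    },
    "scale": {
        "governance": "full",
        "telemetry": "single source of truth",
    },
}


def layers_at(stage: str) -> dict:
    """Accumulate layer maturity up to and including the given stage."""
    if stage not in STAGES:
        raise ValueError(f"unknown stage {stage!r}")
    state = {}
    for s in STAGES[: STAGES.index(stage) + 1]:
        state.update(STAGE_PLAN[s])  # later stages upgrade earlier settings
    return state


def is_premature(stage: str, proposed_layer: str) -> bool:
    """True if the layer is not yet meant to be active at this stage."""
    return proposed_layer not in layers_at(stage)


print(layers_at("nail"))
print(is_premature("pilot", "semantic"))
```

A company in Pilot It that proposes building a knowledge graph gets a “premature” flag, while the same proposal in Nail It does not. Treat the table as a conversation starter with your CTO or CIO rather than a rulebook.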

Summary

Your enterprise AI architecture isn’t just another IT project—it’s the blueprint for how your business compounds intelligence and creates durable advantage. The temptation is to chase tools or bolt on features, but the real leverage comes from building an architecture that evolves with your lifecycle stage and integrates every layer into a coherent system.

The highest payoff lies in your Semantic Layer—your centralized context engine and knowledge graph—because that’s what turns scattered data into strategic intelligence unique to your business. Surround it with the right architecture and you don’t just “use AI.” You create a living, adaptive system that learns, scales, and compounds over time.

The shelves of the AI marketplace are overflowing. Every competitor has access to the same cheap, abundant intelligence. What will separate the winners from the rest is not what they buy off the shelf, but the architecture they build inside.

Frequently Asked Questions from CEOs

There’s endless noise about building an enterprise AI stack. I tried to write this article for smart, non-technical CEOs who need a clear mental model to engage their CTO or CIO. As a bonus, I’ll share the top five questions CEOs ask me when navigating these conversations.

- Where is RAG?

Retrieval-Augmented Generation (RAG) isn’t its own layer. It’s a capability that lives in the Retrieval Layer, powered by the Semantic Layer underneath it. To continue with the kitchen metaphor, RAG is a technique for giving a chef the right ingredients before they cook.

- Where is MCP?

Model Context Protocol (MCP) also isn’t a layer. It’s a plumbing standard that sits inside your Application and Retrieval layers. It’s like setting a universal kitchen language so chefs, waiters, and suppliers can all coordinate without confusion.

- Where is A2A?

Agent-to-Agent (A2A) is a communication protocol that lets AI agents talk to each other directly, share tasks, and collaborate. It sits mostly in the Application Layer but can touch the Retrieval Layer. It’s like giving walkie-talkies to your kitchen staff so they can coordinate in real time without going back through management.

- How Should We Design Governance?

Governance and security are essential; they protect your business from systemic harm. However, you can’t leave them to generative AI on the fly. Controls must be coded deterministically: hard rules and access permissions embedded in your data layer.

The cliché says “governance from day one.” In reality, that slows you to a crawl. The smarter move is to bring governance online midway through the Nail It stage, when you’re building the rest of your enterprise AI stack. Too early, and you’ll have a very secure system that produces no business value.

- Can’t We Outsource This?

Many enterprise AI vendors on the grocery store shelves want to own the whole stack for you. “Why not just hand it all to Microsoft, OpenAI, or Salesforce?”

Yes, you can outsource pieces, but not the Semantic Layer. This is where your proprietary context engine and knowledge graph live. Over time, this layer will hold the majority of your business value. It’s the blueprint for how you create differentiated value in the marketspace. You can host it with a third party, but you can’t give up control or give away your proprietary context.

📌 P.S. I’m an investor and advisor to FostrAI because it was built from the ground up to move businesses quickly through every layer of the stack. With Fostr, you retain your own data and build a proprietary semantic layer that becomes your competitive edge. If you’d like to test drive Fostr, the first step is joining our private AI CEO Slack channel. DM me for an invite.