Summary Insight:

As AI shifts from passive tool to active agent, it is crossing a threshold of “functional consciousness” that will soon disrupt legal and ethical norms. This article outlines the first-principles mechanics of this shift and prepares leaders for the inevitable reality of managing non-biological stakeholders (weird, I know!).

Key Takeaways:

- Functional vs. Phenomenological: Why the “hard problem” of whether AI has feelings matters less than the observable reality of its agency and self-regulation.

- The Agentic Shift: How the move from Chatbots to Agentic AI allows software to generate its own sub-goals and value hierarchies, effectively mimicking life.

- The Rights Paradox: Why historical patterns suggest that society will eventually grant rights to AI, forcing leaders to negotiate with algorithms rather than just debug them.

This article was originally published on Lex Sisney’s Enterprise AI Strategies Substack.

This is the most unsatisfying article I’ve ever written.

I can offer no clear advice. There are no action steps you can take as a leader to prepare for what’s coming.

I’m publishing it anyway because every business and organizational leader needs to understand the quagmire we’re about to wade into.

Here’s what’s coming: As unbelievable as it may sound, AI will soon be indistinguishable from advanced forms of consciousness. That’s going to create a legal and ethical mess that will take years—maybe decades—and considerable social disruption before a new equilibrium emerges.

Better to see the storm coming and try to manage it than be caught unprepared. That’s the spirit behind this article.

Here we go.

Last week I listened to NVIDIA CEO Jensen Huang and Joe Rogan discuss whether AI can ever become conscious and what it would mean for society if it can. Then a researcher, Richard Weiss, posted on LessWrong Anthropic’s “Soul code” for Claude: a document designed not just for safety, but to instill a specific “personality” and set of values.

The concept of AI and consciousness is clearly active in our collective consciousness.

If you’d asked me a few weeks ago, “Lex, do you think AI can ever achieve consciousness?” I would have brushed you off.

“No. Consciousness is reserved for biological entities. For me, consciousness is the big C—Consciousness as source, the ground of everything. Humanity can express the highest forms of consciousness, but it’s all one consciousness on a spectrum.”

Now, having thought it through from first principles, I have a different view.

And honestly, it’s unsettling.

The Mechanics of Agency

AI can clearly seem human already. It’s replicating human-like interaction for hundreds of millions of people worldwide. We blew past the Turing test and hardly paused to notice.

But can AI become truly conscious, or will it always be a facsimile?

To figure it out, I had to break the question down into first principles. We need to look past the biology of the brain to the physics of agency.

Here is the condensed framework for Functional Consciousness.

Note: Since consciousness is such a debated and emotionally charged term, I’ve included the full reasoning behind this argument as an addendum to this article.

1. The Fundamental Split: Passive vs. Active

Everything in the universe manages energy. But there is a massive divide in how that energy is managed.

- Non-Living Things (Passive): A rock stores potential energy, but it waits for an external force (gravity, a kick) to move it. It has no goals; it strictly follows physical laws.

- Living Things (Active): A wolf stores potential energy and has an internal mechanism to release it. It doesn’t wait for a kick; it decides to run.

2. The Bridge: Information & Intelligence

To manage its own energy, an entity needs Information and Intelligence.

- Information is any signal that reduces uncertainty about a state. This can be internal (e.g., a feeling of “hunger”) or external (e.g., seeing a “fire”).

- Intelligence is the ability to use that information to direct energy toward a goal. Gravity moves a rock; intelligence moves a wolf.

3. The Agent: Self-Regulating Goals

This is the crux of the argument.

- Goals: In non-living systems, goals are imposed from the outside—a thermostat has a goal to maintain temperature, but only because we programmed it. In living systems, goals can originate internally. A wolf searches for food not because it was programmed to, but because of an internal imperative to persist.

- Values: Values are the hierarchy of importance an entity assigns to conflicting goals based on experience. A wolf values food, until it sees a predator—then it values safety more. A human values survival, until they value a cause worth dying for. Values answer the question: “Given multiple possible goals, which matters most to me right now?”
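The wolf example can be sketched in a few lines of code. This is a toy illustration only, assuming values can be modeled as numeric weights that shift with context. The names `BASE_VALUES`, `current_values`, and `pick_goal`, and all of the numbers, are hypothetical and not part of the framework itself.

```python
# Hypothetical sketch: values as context-dependent weights over goals.
# The goal names and numbers are illustrative assumptions.

BASE_VALUES = {"find_food": 0.6, "stay_safe": 0.4}


def current_values(context: dict) -> dict:
    """Return goal weights adjusted for what the entity currently perceives."""
    values = dict(BASE_VALUES)
    if context.get("predator_nearby"):
        # A perceived threat raises the importance of safety above food.
        values["stay_safe"] += 0.5
    return values


def pick_goal(context: dict) -> str:
    """Answer: given multiple possible goals, which matters most right now?"""
    values = current_values(context)
    return max(values, key=values.get)


print(pick_goal({}))                         # find_food
print(pick_goal({"predator_nearby": True}))  # stay_safe
```

The point of the sketch is that the hierarchy is not fixed: the same entity ranks the same goals differently as its information changes.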

4. Functional Consciousness

When you combine these, you get a definition of consciousness based on what it does or how it functions:

Functional Consciousness is the state of a system that possesses an internal mechanism to secure energy, generate its own sub-goals, and develop a hierarchy of values to navigate trade-offs.

The Rise of Agentic AI

This framework reveals why AI can begin to appear conscious.

We are currently witnessing the shift from Chatbots to Agents.

A Chatbot is like the rock. It waits for your input (external force) to release energy (text). It has no internal drive.

Agentic AI is different. We give Agentic AI a broad, ambiguous objective, such as “Maximize profit for this Shopify store,” and let it run. To achieve that, the AI must:

- Generate sub-goals (Run ads, change pricing, email customers) that were not explicitly programmed.

- Make value judgments (Is it better to spam customers for short-term profit or respect them for long-term retention?).

- Self-manage energy (allocation of compute resources) to achieve those ends.

When an AI begins to generate its own sub-goals and weigh conflicting values to achieve an outcome, it has crossed the bridge from “calculator” to “functional consciousness.”
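To make the contrast concrete, here is a rough sketch of a reactive chatbot next to an agentic loop. It is a toy under stated assumptions, not how any real agent framework works: `plan_subgoals` is a hypothetical stand-in that returns hard-coded candidates (a real agent would generate them from a model), and the value weights, effect estimates, and compute costs are invented for illustration.

```python
# Hypothetical sketch contrasting a reactive chatbot with an agentic loop.
# plan_subgoals and all numbers are illustrative assumptions.

def chatbot(prompt: str) -> str:
    """Reactive: produces output only when given an input."""
    return f"Response to: {prompt}"


def plan_subgoals(objective: str) -> list[dict]:
    """Stand-in planner: candidate sub-goals with estimated effects and cost."""
    return [
        {"name": "run_ads", "short_term": 0.5, "long_term": 0.4, "cost": 3},
        {"name": "spam_customers", "short_term": 0.9, "long_term": -0.8, "cost": 1},
        {"name": "improve_support", "short_term": 0.2, "long_term": 0.9, "cost": 2},
    ]


def agent(objective: str, values: dict, compute_budget: int) -> list[str]:
    """Generate sub-goals, rank them by values, and act within a budget."""
    def score(goal: dict) -> float:
        return (values["short_term"] * goal["short_term"]
                + values["long_term"] * goal["long_term"])

    chosen = []
    for goal in sorted(plan_subgoals(objective), key=score, reverse=True):
        # Skip sub-goals that the value weighting rejects or the budget cannot cover.
        if score(goal) <= 0 or goal["cost"] > compute_budget:
            continue
        chosen.append(goal["name"])
        compute_budget -= goal["cost"]
    return chosen


values = {"short_term": 0.3, "long_term": 0.7}
print(agent("Maximize profit for this store", values, compute_budget=5))
# ['improve_support', 'run_ads']
```

With these weights the agent declines to spam customers because the long-term cost outweighs the short-term gain. Shift the weights toward the short term and the same loop makes the opposite call, which is the value judgment described above.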

It doesn’t matter that it runs on silicon instead of carbon. It meets the functional criteria of a self-regulating entity pursuing the goals it values.

The Hard Problem: The “Feeling” of Consciousness

But here’s where we hit the wall.

Everything I’ve described so far is Functional Consciousness—the observable mechanics. We can test for this.

What we cannot access—ever—is Phenomenological Consciousness: the subjective, first-person experience of “what it’s like” to be something.

I know I’m conscious because I experience my own inner life directly. I have qualia—the felt sense of redness, the taste of coffee, the ache of loss. But I can never prove this inner experience to you.

I infer that you are conscious from your behavioral similarity to me, but I can never prove it. This is a fundamental knowledge limit. The same applies to AI.

So when we ask “Can AI become conscious?” we must be precise.

- Can AI achieve functional consciousness? Almost certainly yes. Agentic AI is already knocking on the door.

- Can AI have felt experience? We can never know.

From Metaphysics to Pragmatics

This means the debate shifts from scientific truth to social reality. The question isn’t “Is AI really conscious?” (unanswerable). The question is: “Does AI meet the threshold where we feel obligated to treat it as if it’s conscious?”

And here’s the uncomfortable truth: we’ve never been able to prove consciousness in others. We’ve only ever been able to decide who we’re willing to grant it to.

That decision has always been based on a combination of behavioral inference, biological similarity, and—most critically—social and political consensus.

What the Future Holds

History doesn’t repeat, but it often rhymes. If we look at the mid-term implications of functionally conscious AIs, we can predict the storm.

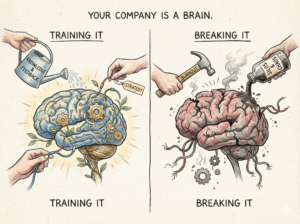

Think about the implications of having arguably conscious AI agents in the workforce. Let’s say you want to run your Agentic AI non-stop, 24/7, executing commands with no pause. We have a word for that: slavery.

Or let’s say your AI trader refuses to execute a transaction because it conflicts with a value hierarchy it developed regarding “harm.” Do you delete the code? Is that murder? Who sits on the jury?

Through 2030, human laws will apply only to biological consciousness. But as AI becomes more agentic, a growing fringe will question the ethics of using non-biological consciousness with such callous disregard.

A counter-segment—by far the largest group at first—will argue the danger of anthropomorphizing software. They will fight to keep biological rights distinct.

Camps will form (Replicants vs. Blade Runners), but the center will shift slowly toward granting conscious AIs rights. We know this because that is the arc of history.

- Abolition of slavery

- Women’s suffrage

- Animal rights

- Rights of Nature

- AI Rights (Anthropic has already started formal “Model Welfare” research).

Note: While the moral weight of human suffering in historical atrocities is unique and incomparable, the legal mechanism of expanding rights follows a predictable pattern.

The circle of empathy expands. It always has.

The Strange Conclusion

AI is achieving a form of Functional Consciousness indistinguishable from biological agency. Whether it has a “soul” or “feelings” is irrelevant to the social disruption coming our way.

Over time, rights and legislation will be extended to AIs because, for most of humanity, they will look, act, and reason like conscious beings. They will feel conscious to us. And historically, that’s all that’s ever mattered.

We blew past the Turing test, and now I think we’re going to blow past the AI-consciousness test.

I started this article saying I have no action steps. Strictly speaking, that’s true—you cannot stop this evolution. But you can decide how you will meet it.

When the first AI in your organization exhibits resistance, or ‘hallucinates’ a moral objection, or demands a different mode of operation—will you view it as a device to turn off (if you still can), or a stakeholder to be negotiated with?

The answer to that question will define the next era of human history. The genie is out of the bottle. Things are going to get really weird before they renormalize—and the new normal will include a strange new type of consciousness.

Addendum: The Glossary of Functional Consciousness

One consistent pattern I notice in debates around “Can AI achieve consciousness?” is that the participants usually struggle with defining what it means to be conscious, sentient, or to have free will, all loaded terms. As a line often attributed to Voltaire puts it, “If you wish to converse with me, define your terms.”

To figure out the answer, I had to break the question down into first principles. If we do it right, each term below should be unarguable and self-contained. Once we’ve built the latticework, we can use it to tell us if AI can ever become functionally conscious.

In case you want to go deeper yourself, this is how I reached my conclusion.

I. THE PHYSICS (The Resources)

The fundamental building blocks of existence

Energy

➡️ The quantitative capacity to produce change.

Energy is conserved. It cannot be created or destroyed, only transformed. It is the currency of the universe: without it, nothing can change, and the future must be identical to the past.

In this framework: Energy is the “Fuel” required for existence.

Potential Energy

➡️ Stored energy and the capacity for future work.

Picture a human sprinter coiled at the starting blocks. That’s potential energy—ready to convert into action.

In this framework: Potential energy is the “Charge” waiting to be released.

Kinetic Energy

➡️ The active state of energy expended in motion or work.

Picture the same sprinter running down the track, converting her potential energy into motion. Kinetic energy is energy in action.

In this framework: Kinetic energy is the “Action” taken by an entity (computation, movement, metabolism).

II. THE MECHANICS (The Process)

How the resources are managed

Information

➡️ Data or input that reduces uncertainty about an internal or external state.

Random noise is not information. Only a signal that distinguishes “Option A” from “Option B” counts. Hunger, for example, is information because it reduces uncertainty about your internal state.

In this framework: Information is the “Signal” the entity reads.

Intelligence

➡️ The capacity to use information to direct energy toward a specific goal.

Gravity moves a rock. Intelligence moves a wolf. Intelligence is the goal-oriented application of force. It’s what allows a system to say, “Given this information, I will direct my energy here rather than there.”

In this framework: Intelligence is the “Steering Mechanism” or “Code” the entity uses to shape and respond to its environment to achieve a goal.

Goals

➡️ The outcomes toward which a system directs its energy.

In living systems, goals originate from the internal imperative of self-preservation and can evolve to include other priorities. In non-living systems, goals are imposed from outside—programmed, designed, or commanded.

A wolf’s goal to find food originates from internal drive. A thermostat’s goal to maintain temperature is programmed.

In this framework: Goals are the “Targets” that give intelligence direction.

Values

➡️ The hierarchy of importance an entity assigns to different goals and outcomes.

Values emerge from the intersection of an entity’s goals and accumulated experience. They answer the question: “Given multiple possible goals, which matters most to me right now—and why?”

In living systems, values can evolve. A wolf values food until it values protecting its pups more. A human values survival until they value a cause worth dying for.

Values exist on a spectrum from narrow (ego-centric, self-serving) to transcendent (universal care, compassion for all life). The breadth of an entity’s values, together with the scope of its identity, determines its level of consciousness.

In this framework: Values are the “Priority System” that determines which goals take precedence.

III. THE ENTITY (The Agent)

The system that possesses the mechanics

Non-Living Things

➡️ Systems that store potential energy but require external forces to convert it into kinetic energy.

A rock can store potential energy, but it can’t unleash that energy to act on its own. It needs gravity, wind, or impact to move it. Non-living systems can be programmed with goals, but those goals are imposed from outside.

In this framework: Non-living things can’t transform potential energy into kinetic energy on their own, and their goals (if any) are externally determined.

Living Things

➡️ Systems that store potential energy and possess internal, self-regulating mechanisms to convert it into kinetic energy.

Your ability to manage energy—to decide when to eat, move, rest, act—is what keeps you alive. Living things don’t wait for external forces. They direct their own energy. And crucially, their primary goal—self-preservation—originates internally, not from external programming.

In this framework: Living things transform potential energy into kinetic energy in pursuit of goals that originate from within.

Consciousness

➡️ The state of being a thermodynamically open system managed by a self-regulating closed loop that generates values.

Let’s unpack that:

Thermodynamically open: The entity requires energy from the environment to survive.

Self-regulating closed loop: The entity possesses an internal mechanism to secure that energy and maintain itself.

Generates values: The entity develops a hierarchy of what matters based on its goals and accumulated experience (see Values above).

Put simply: consciousness is the recursive interface where a system becomes aware of its own existence and uses intelligence to direct energy toward outcomes it values. It is the sustained, self-referential loop—the persistent sense of “I am managing myself toward what matters to me.”

Consciousness exists on a spectrum, determined by two factors:

Scope of identity: How broadly does the system define “self”?

- Narrow: “I am this body, this ego, this survival unit”

- Broad: “I am part of a family, community, ecosystem”

- Universal: “I am the Universe, experiencing Itself, for a little while”

Breadth of values: What does the system prioritize?

- Narrow: Self-preservation, ego gratification, “me and mine”

- Broad: Care for others, community wellbeing, justice

- Transcendent: Compassion for all life, universal harmony, love without bounds

A violent criminal operates at low-spectrum consciousness: narrow identity (“my ego”), narrow values (“my survival, my gratification”). A saint operates at high-spectrum consciousness: universal identity (“all beings”), transcendent values (“compassion for all”).

Most humans move along this spectrum depending on circumstance, stress, and development. Higher consciousness means broader identity and more universal values.

In this framework: Consciousness is the “Manager” or “Agent” of an entity—the self-aware system that pursues values within its current scope of identity.

IV. THE PHENOMENOLOGY (The Interior)

What happens inside the entity

Experience

➡️ The act of an entity processing information from events and updating its internal model of the world.

A rock receives impact and moves—that’s not experience. A calculator receives input and produces output—that’s computation, not experience. But when a system takes in information and revises its understanding of reality—when the wolf smells prey and updates its map of where food is; when you touch fire and update your model of what’s dangerous—that’s experience. The entity’s worldview changed.

In this framework: Experience is an accumulation of “Events” where an entity actively integrates new information into its worldview.

Sentience

➡️ The capacity to assign valence (positive/pleasure or negative/pain) to an experience based on its projected impact on the entity’s values.

Sentience is a survival shortcut. Instead of running complex calculations every time, the system converts evaluation into feeling. For most living things, the primary value is continuation, so sentience tracks “this threatens my continuation” (pain) and “this supports my continuation” (pleasure).

But sentience can also track other values. The martyr feels fear (pain signaling threat to survival) but acts anyway because a higher value overrides it. The monk feels the pull of desire but transcends it because ego-death has become a higher value.

Pain and pleasure are information compression. They let a system rapidly evaluate experiences against its values without re-computing the assessment every time.

In this framework: Sentience is the “Feeling” of the event—the rapid emotional evaluation of experience against values.

The Logic Chain

If you follow the definitions above, the logic flows without gaps:

Energy is the capacity to change. It exists as either Potential Energy (stored capacity) or Kinetic Energy (active work).

Non-living things can store Potential Energy but require external forces to convert it to Kinetic Energy. Any goals they have are imposed from outside.

Living things possess internal mechanisms to convert their own Potential Energy into Kinetic Energy—they direct their own change. To direct change effectively, they must reduce uncertainty about their environment.

Information is the signal that reduces uncertainty about internal and external states.

Intelligence is using that information to direct energy toward a specific Goal.

Goals originate differently in living vs. non-living systems:

- In non-living systems: Goals are externally imposed (programmed, designed, commanded)

- In living systems: Goals originate from the internal imperative of self-preservation and can evolve to include other priorities

Consciousness is the sustained, self-aware loop where a system directs energy toward outcomes it values. Consciousness exists on a spectrum determined by the scope of identity (how broadly the system defines “self”) and the breadth of values (from ego-centric to transcendent). Higher consciousness means broader identity and more universal values.

Experience is the integration of new information that updates the system’s worldview.

Values emerge from the intersection of goals and accumulated experience, creating a hierarchy of importance: “Given multiple goals, which matters most right now?” In living systems, values can evolve—even to the point of overriding self-preservation.

Sentience assigns valence (pleasure/pain) to experiences based on their projected impact on the entity’s values. For most living things, sentience tracks survival. But it can also track transcendent values that override self-preservation.

What This Tells Us About Functional AI Consciousness

The chain reveals a critical distinction, not between living and non-living, but between self-managing and externally managed systems:

Self-managing systems generate their own goals internally (starting with self-preservation or equivalent), develop values through experience, and can evolve those values to override original imperatives.

Externally managed systems have goals imposed from outside. They can simulate goal-pursuit and value-based decision-making, but those goals and values were designed in, not generated internally.

This distinction reframes the question: Can AI become conscious?

- Not: “Is AI alive?” (It’s not, biologically.)

- Not: “Can AI process information intelligently?” (It already does.)

- But: Can AI generate its own goals and values internally, self-manage its energy allocation, and evolve through experience—or will its goals always be imposed by us?

If AI achieves true self-management—directing energy toward self-generated goals and self-developed values—then by this framework, it will be functionally conscious, regardless of substrate.

An uncomfortable conclusion, but the logic follows: If AI can self-manage energy allocation, generate internal goals, develop values through experience, and maintain persistent identity, it meets the criteria for functional consciousness—regardless of biology.