Summary Insight:

AI agents and human teams fail for the same reason—context overload. Master the principles of context engineering, and you’ll lead both more effectively in this new era.

Key Takeaways:

- Both AI agents and humans have finite attention budgets—overload either one with irrelevant context and performance degrades.

- The best leaders curate high-signal inputs, externalize memory into systems, and prune context ruthlessly—just like effective AI engineers do.

- Leadership isn’t about managing harder—it’s about architecting the flow of context so your system (human or AI) can sense, decide, and act with clarity.

This article was originally published on Lex Sisney’s Enterprise AI Strategies Substack.

Every week, the AI world rediscovers an old truth.

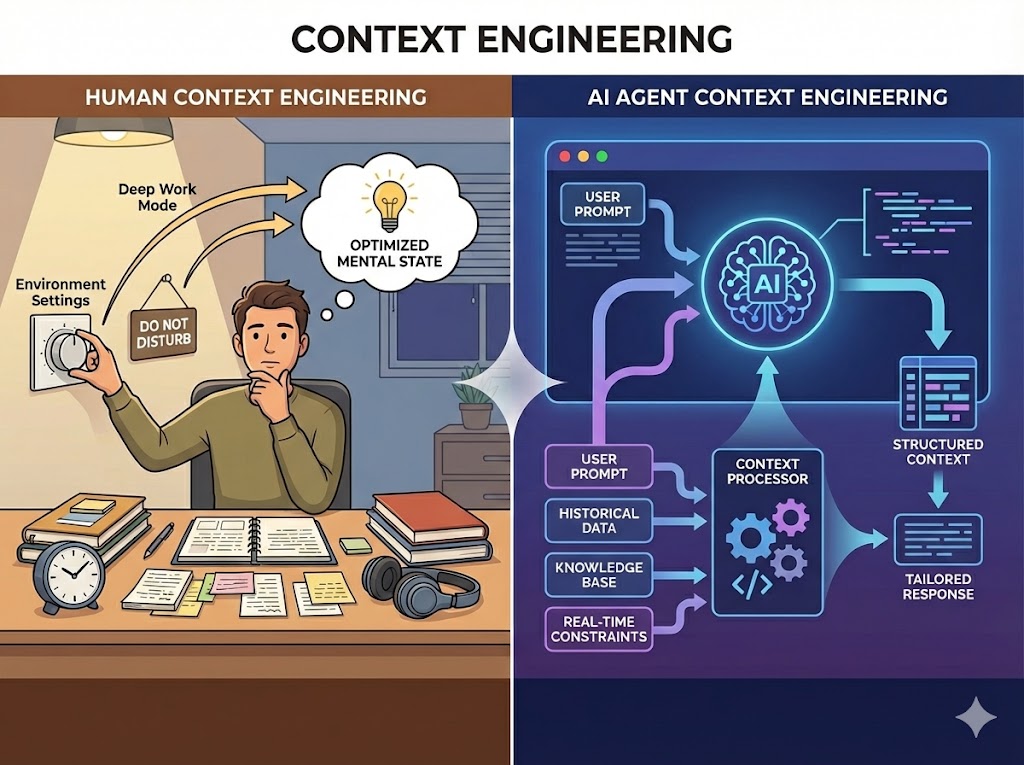

For example, Anthropic recently published a piece on effective context engineering for AI agents. The core insight: agents degrade when their context window gets bloated—too many tokens, too much noise, too little signal.

If you’ve been leading teams for more than five minutes, this should sound familiar.

For fifteen years, I’ve been teaching CEOs how to structure high-performance organizations. And now, watching leaders struggle to manage AI agents, I’m seeing the exact same patterns—the same failures, the same breakthroughs.

Here’s the insight that changes everything:

The more you view your AI agents as team members—with the same strengths, weaknesses, and constraints—the better you’ll be at leading in this new era.

Not because AI is becoming more human.

But because great leadership has always been about the same thing: engineering context for energy-constrained systems.

Let me show you what I mean.

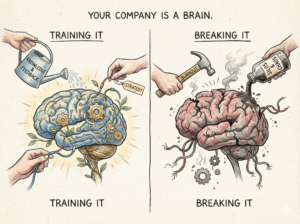

1. Finite Attention and Context Rot

In AI:

LLMs don’t have infinite attention. Anthropic calls it an “attention budget.” Overload an agent with irrelevant tokens, and performance drops. As the context window fills with junk data, old threads, and noise, precision degrades. They call this “context rot.”

In humans:

Your team doesn’t have infinite attention either. Organizational Physics calls it “finite energy in time.”

Many companies I have worked with were suffering from human context rot:

- Too many projects running in parallel instead of in sequence

- Too many tools that don’t talk to each other

- Too many Slack channels where decisions get lost

- Too many people involved in every decision

Your team isn’t dumb. They’re overloaded.

When you give your team seventeen “top priorities,” you’re not being ambitious—you’re fragmenting their attention budget. The result is identical to running an AI agent with a bloated context window: degraded performance, slower execution, more errors.

As irrelevant context rises, signal-to-noise falls. Execution slows. Decisions degrade. Entropy wins.

The fix for AI agents? Aggressive context pruning.

The fix for human teams? Exactly the same thing.
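To make “aggressive context pruning” concrete, here is a minimal sketch of pruning against a fixed attention budget. It is an illustration, not how Anthropic or any particular framework does it. The `ContextItem` class, the `relevance` scores, and the word-count token estimate are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class ContextItem:
    text: str
    relevance: float  # hypothetical score in [0, 1]; higher means more useful


def estimate_tokens(text: str) -> int:
    # Crude stand-in for a real tokenizer: count whitespace-separated words.
    return len(text.split())


def prune_context(items, budget):
    """Keep the most relevant items whose combined size fits the budget."""
    kept, used = [], 0
    # Visit items from most to least relevant; skip any that would overflow.
    for item in sorted(items, key=lambda i: i.relevance, reverse=True):
        cost = estimate_tokens(item.text)
        if used + cost <= budget:
            kept.append(item)
            used += cost
    return kept


items = [
    ContextItem("current task spec for the billing fix", 0.9),
    ContextItem("old thread about office snacks last quarter", 0.1),
    ContextItem("api contract for billing service", 0.8),
]
kept = prune_context(items, budget=12)
```

With a budget of 12 words, the task spec and the API contract survive and the stale thread is dropped. The same logic applies to a team’s priority list: rank by signal, fit to the budget, and cut what doesn’t fit.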

2. High-Signal Curation Beats Information Overload

Anthropic’s advice for AI:

“Find the smallest possible set of high-signal tokens.”

For humans:

Find the adjacent high-leverage points.

The best leaders don’t try to solve problems with more information. They curate what’s essential and high-leverage.

Think about the best strategic memo you’ve ever received from a CEO. Was it 40 pages of analysis? Or was it 2 pages of context setting?

This is why your all-hands meetings feel useless—you’re maxing out the context window instead of curating the high-signal inputs. You don’t prompt your AI agent with exhaustive detail about every possible scenario. You give it clear intent and trust the model to execute.

The same applies to humans. Your job is to set the context, not dictate every keystroke.

Give high-level purpose. Set concrete expectations. Leave room for smart execution.

3. Just-In-Time Retrieval = Scalable Organizations

In AI:

Good agents pull context when needed, not before. Many use retrieval-augmented generation (RAG) or tool calls to access knowledge without bloating the working context window.
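Here is a rough sketch of the just-in-time idea, assuming a tiny in-memory knowledge base and simple keyword overlap in place of a real search index or embedding model. The `KNOWLEDGE_BASE` contents and the `retrieve` and `build_prompt` helpers are hypothetical names invented for this example.

```python
import re

# Hypothetical knowledge base: it lives outside the prompt until needed.
KNOWLEDGE_BASE = {
    "pricing-policy": "Discounts above 20 percent need approval from finance.",
    "onboarding": "New hires get laptop access on day one.",
    "incident-runbook": "Page the on-call engineer, then open an incident channel.",
}


def tokenize(text):
    # Lowercase and split into alphanumeric words.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, store, top_k=1):
    """Return the keys of the documents sharing the most words with the query."""
    query_words = tokenize(query)
    scores = {key: len(query_words & tokenize(text)) for key, text in store.items()}
    ranked = sorted(store, key=lambda key: scores[key], reverse=True)
    # Drop documents with no overlap at all.
    return [key for key in ranked[:top_k] if scores[key] > 0]


def build_prompt(question, store):
    """Load only the retrieved documents into the working context."""
    context = "\n".join(store[key] for key in retrieve(question, store))
    return f"Context:\n{context}\n\nQuestion: {question}"


prompt = build_prompt("Who approves discounts?", KNOWLEDGE_BASE)
```

The prompt ends up holding one relevant policy line instead of the whole knowledge base. Everything else stays in the system, ready to be pulled when a different question needs it.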

In humans:

Good teams do the same thing.

If your people must carry everything in their heads—all the strategy, all the decisions, all the background—you’ve built a brittle organization.

If they know where to retrieve context when needed—through tools, processes, embedded documentation—you’ve built something scalable.

Memory externalized = cognition amplified.

This is why I tell CEOs:

Stop storing strategy in your head.

Start storing it in your system.

When everything lives in your head (or in Slack threads no one can find), you’re creating a human bottleneck. Just like an AI agent trying to hold a million tokens in its context window.

The solution isn’t working harder. It’s building embedded context systems.

4. Compaction = Organizational Memory

In AI:

Anthropic uses “compaction” to compress old context and refresh the window—keeping only what matters, discarding the noise. They also use structured memory tools that let agents create stable knowledge outside the context window.
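A minimal sketch of compaction might look like the following. It assumes a plain list of message strings and uses a stand-in `summarize` function that keeps only lines marked `DECISION:`. In a real agent the summary would more likely come from a model call; the function names and the marker convention here are assumptions for illustration only.

```python
def summarize(messages):
    """Stand-in summarizer: carry forward only lines marked as decisions."""
    decisions = [m for m in messages if m.startswith("DECISION:")]
    return "SUMMARY: " + " | ".join(decisions)


def compact(history, max_messages, keep_recent):
    """Compress older messages into one summary once history grows too long."""
    if len(history) <= max_messages:
        return list(history)
    # Everything before the split gets summarized; the rest is kept verbatim.
    split = max(len(history) - keep_recent, 0)
    older, recent = history[:split], history[split:]
    return [summarize(older)] + recent


history = [
    "chat: hello",
    "DECISION: ship v2 on Friday",
    "chat: lunch?",
    "DECISION: freeze hiring",
    "chat: status",
    "chat: next step",
]
compacted = compact(history, max_messages=4, keep_recent=2)
```

Six messages become three: one summary line carrying the two decisions, plus the two most recent messages. The small talk is gone and the commitments remain.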

In humans, we call that:

- Debriefs and retrospectives

- Strategic planning sessions

- OKRs that actually drive behavior

- Clear process cycles

- Written principles and architectural maps

- Real-time dashboards

When done well, these aren’t ceremonies. They’re context purification.

They clear noise and carry forward only the signal: lessons learned, decisions made, commitments renewed, next steps clarified.

Teams that never compact drown in stale threads, old assumptions, and zombie priorities.

What isn’t written doesn’t exist. What isn’t stored outside the brain doesn’t scale.

I’ve watched brilliant CEOs lose traction because they refuse to write things down. They think they’re being agile. They’re actually creating organizational amnesia.

Every conversation requires re-explaining context. Every decision gets re-litigated. Every new person requires the CEO to download months of context manually.

You wouldn’t run an AI agent that way. Don’t run your company that way either.

5. Sub-Agents = Team Structure

In AI:

Anthropic uses sub-agents to handle complexity without blowing the main context window. One coordinator agent delegates to specialized agents, each with its own focused context.
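The delegation pattern can be sketched in a few lines. This is a toy, assuming each sub-agent is just a function that sees only its own notes and returns a condensed report. The `make_subagent` and `coordinate` helpers and the sample notes are hypothetical; real sub-agents would be separate model calls with their own context windows.

```python
def make_subagent(name, notes):
    """Build a sub-agent that can see only its own notes."""
    def run(task):
        keywords = task.lower().split()
        # Keep only the notes that mention a keyword from the task.
        relevant = [n for n in notes if any(k in n.lower() for k in keywords)]
        summary = "; ".join(relevant) if relevant else "nothing relevant"
        return f"{name}: {summary}"
    return run


def coordinate(task, subagents):
    """The coordinator sees only the condensed reports, never the raw notes."""
    return [agent(task) for agent in subagents]


team = [
    make_subagent("finance", [
        "Pricing change raises margin by 3 points",
        "Office lease renews in May",
    ]),
    make_subagent("legal", [
        "Pricing terms require 30 days notice",
        "Trademark filing pending",
    ]),
]
reports = coordinate("pricing", team)
```

The coordinator receives two short reports about pricing. The lease renewal and the trademark filing never enter its context, because each specialist filtered its own domain first.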

In humans:

Team structure.

One agent can’t hold everything. One person can’t hold everything. One department can’t hold everything.

The answer is specialization plus integration: different working styles and functions working together, each with its own role, unified by a clear structure.

You don’t try to make one AI agent do everything. You architect a system of agents that work together.

Yet I still see startup founders trying to solve problems by making themselves the universal context handler: every decision flows through them, every question comes to them, and all coordination happens through them.

That’s not leadership. That’s being a human API with no rate limit.

What This Means for You

LLMs and humans aren’t as different as we think.

Both are energy-constrained systems. Both rely on curated context. Both degrade with overload. Both require architectures that protect attention and preserve signal.

Great AI systems and great companies succeed for the same reason: they design context into the system.

This is the actual job of leadership. Not writing better prompts. Not piling on a bunch of new half-baked priorities.

But architecting the right context into the system itself.

If you’re struggling to manage AI agents, look at how you manage people. The same mistakes show up:

- Overloading with irrelevant context

- Failing to prune outdated information

- Not externalizing memory into systems

- Trying to do everything through one central node

And if you’re struggling to manage people, look at how AI engineers manage agents. They’re solving the same problems you are.

Where to Start

Ask yourself:

For your team:

- What’s bloating their context window right now?

- What irrelevant context can you prune this week?

- Where is critical context stored in heads instead of systems?

For your AI agents:

- Are you overloading them the same way you overload your team?

- Have you built retrieval systems, or are you dumping everything into the prompt?

The answers will be surprisingly similar.

Because whether you’re leading humans or agents, the physics is the same.

Master context engineering, and you become exceptional at managing both.

P.S. The symptoms of context rot are obvious: endless meetings that accomplish nothing, decisions that get re-litigated, smart people feeling stuck, and a persistent sense that everyone’s working hard but nothing’s moving forward.

That’s not a people problem. It’s a context problem.

And context problems have structural solutions.