Summary Insight:

Google describes how multiple agents can coordinate. But real leverage won’t come from more agents alone. It will come from true organizational intelligence, which requires alignment.

Key Takeaways:

- Agents are people too. Really.

- Without organizational alignment, agents amplify chaos, not output.

- Organizational intelligence—not multi-agent plumbing—is the real moat.

This article was originally published on Lex Sisney’s Enterprise AI Strategies Substack.

Google just made two major AI announcements—but the second one matters more than you think.

First came Gemini 3.0, followed almost overnight by their new image generation model. The leap in foundational AI capabilities is impressive, and we’re going to see even more brought to market ever faster by competing hyperscalers.

But there was something else, easily overlooked in the headlines: Google also published their vision for enterprise AI in Introduction to Agents and Agent Architectures. It describes how AI agents can coordinate decisions at scale.

While the hyperscalers continue to battle for technical supremacy, an urgent issue is going unaddressed: how humans and agents will actually coordinate inside real companies.

Here’s what you need to be thinking about.

What Google Gets Right

A shift from “one big model” to coordinated teams of agents.

Google finally drops the idea of a single universal do-all agent. Instead, they describe networks of specialized agents—researchers, planners, evaluators—working together through shared context and protocols. That mirrors how effective human teams work: distributed roles, communication protocols, feedback mechanisms, continuous improvement, etc.

A modular, multi-model foundation.

The Agent Development Kit (ADK) and the Model Context Protocol (MCP) make the system intentionally open. You can route tasks across different models, tools, and data stores. No lock-in. No “our model or nothing.” That’s the only viable approach in a world with multiple models and fast-moving development.

Governance built in from the start.

Each agent is treated as its own digital actor—with identity, policies, telemetry, and logs. When you have machines executing code and making decisions, you don’t get safety from good intentions. You get it from clear boundaries, observability, and traceability.
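To make “clear boundaries, observability, and traceability” concrete, here is a minimal Python sketch of the idea. It is not Google’s ADK or MCP API: `Policy`, `AgentIdentity`, and `attempt` are hypothetical names, and the tool allow-list is an assumed, deliberately simple stand-in for a real policy engine. Each agent carries an identity and an accountable human owner, and every attempted tool call is checked against its policy and logged whether or not it is allowed.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class Policy:
    # Hypothetical allow-list: the only tools this agent may call.
    allowed_tools: frozenset[str]


@dataclass
class AgentIdentity:
    agent_id: str                 # the agent's own identity as a digital actor
    owner: str                    # the accountable human behind the agent
    policy: Policy
    audit_log: list[dict] = field(default_factory=list)

    def attempt(self, tool: str, payload: str) -> bool:
        """Check a tool call against policy and log the outcome either way."""
        allowed = tool in self.policy.allowed_tools
        # Record blocked attempts too, so the log shows what the agent tried.
        self.audit_log.append({
            "ts": datetime.now(timezone.utc).isoformat(),
            "agent": self.agent_id,
            "owner": self.owner,
            "tool": tool,
            "payload": payload,
            "allowed": allowed,
        })
        return allowed


if __name__ == "__main__":
    researcher = AgentIdentity(
        agent_id="competitor-researcher",
        owner="cmo@example.com",
        policy=Policy(allowed_tools=frozenset({"web_search", "read_crm"})),
    )
    researcher.attempt("web_search", "competitor pricing pages")
    researcher.attempt("send_email", "draft outreach to customers")
    for entry in researcher.audit_log:
        print(entry["tool"], "allowed" if entry["allowed"] else "blocked")
```

Running it prints `web_search allowed` followed by `send_email blocked`. Logging blocked attempts as well as allowed ones is the point of the design: traceability comes from recording what an agent tried, not only what it was permitted to do.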

In short: Google is showing how to solve the technical plumbing of multiple AI agents.

What’s still unsolved is the human-agent organizational architecture that the plumbing connects to.

Where Machine Intelligence Stops

Google’s architecture assumes that the real system of record is technical: models, tools, agents, and orchestrators exchanging messages through APIs.

But organizations don’t run on APIs.

They run on how energy, decisions, and information flow through the system.

Here’s what this looks like in practice:

Imagine a marketing team where three AI agents coordinate perfectly: one researches competitors, one drafts campaigns, one analyzes performance. Assume the technical orchestration is flawless, which, as you know, is not always the case with generative AI.

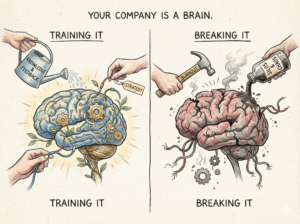

But even with technically perfect agents, if the CMO lacks the right skills and orientation, if decision rights are unclear, if brand strategy keeps shifting erratically, if feedback loops between marketing and product are broken—those agents will optimize toward the wrong outcomes. The technical coordination masks the organizational dysfunction underneath.

This is the fundamental limit of a machine-centric design. It focuses on the interfaces between agents instead of the flows moving through those interfaces. You can have flawless technical agents and still watch the organization fall into entropy—misalignment, friction, rework, and stalled feedback loops—because the underlying system isn’t structured to execute on its mission.

This is where the principles of Organizational Physics become essential.

Google is defining how agents communicate. Organizational Physics is concerned with what actually moves through those channels:

- How fast useful information flows

- Where energy drives productive work or leaks

- How structure accelerates or slows velocity

- How the system sustains itself as complexity increases

Without that lens, you end up with technically correct agents operating inside a physically misaligned organization. The result isn’t more intelligence. It’s faster entropy.

Agents Are People Too

The single greatest mental leap we can make about AI agents is to think of them as human. I know this will bother some people, and I’m not claiming AI is conscious. But as a mental model, it’s remarkably useful.

All humans have potential and follow a lifecycle curve. As they develop, some become highly coherent, driven, adaptive, and exceptional at their core strengths. Others progress more slowly or stall entirely—remaining unfocused, passive, resistant to change, and draining more energy than they create. Every human eventually reaches the end of their curve, whether gradually or suddenly. Such is life.

AI agents follow the same pattern. Some will evolve quickly and excel at specific tasks. Others will remain fragile, brittle, and incapable of much useful work. Like interns, agents require investment in training, and not every investment will pay off.

At the top of your organization, your goal is to build a team of exceptional people—experts at what they do, aligned on ideals, mission, and values—equipped with powerful AI agents and co-pilots that let them coordinate and amplify their impact beyond what was previously possible. With AI, every smart, talented, driven person now has the opportunity to make a superhuman impact. That’s the new reality.

But here’s the catch: how your organization is designed and aligned matters just as much for humans as it does for their agents. You can’t achieve technical alignment without organizational alignment. You can’t leverage machine intelligence without first harnessing organizational intelligence.

Organizational intelligence isn’t just a new data layer. It’s a shared world model—encompassing history, mission, roles, goals, processes, and feedback loops—that keeps every actor in the system, human or AI, operating from the same map of reality.

Designing for Organizational Intelligence

If you think of AI agents as people, you can start treating them that way. This means every AI agent should have a clearly defined role in your structure—with a stated purpose, accountabilities, orientation, decision-making rights, targets, and KPIs. And yes, every AI agent should report to a human who brings sound judgment, coordination, and coaching… just like your leaders do for your human teams today.
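Treating an agent like a hire implies writing its role down the same way you would for a person. The Python sketch below is a hypothetical “role card”: every field name is an assumption that maps onto the attributes listed above (purpose, accountabilities, orientation, decision rights, targets and KPIs, reporting line). The `role_gaps` helper, also hypothetical, flags roles with no stated purpose or KPIs and any agent that does not report to a human.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class RoleCard:
    """Hypothetical role definition shared by humans and AI agents."""
    name: str
    is_agent: bool
    purpose: str
    accountabilities: list[str] = field(default_factory=list)
    orientation: str = ""                      # e.g. "explore" vs. "execute"
    decision_rights: list[str] = field(default_factory=list)
    targets: dict[str, float] = field(default_factory=dict)  # KPI -> target
    reports_to: Optional[str] = None           # name of another RoleCard


def role_gaps(roles: list[RoleCard]) -> list[str]:
    """Return readable problems; an empty list means no gaps were found."""
    by_name = {r.name: r for r in roles}
    problems = []
    for r in roles:
        if not r.purpose:
            problems.append(f"{r.name}: no stated purpose")
        if not r.targets:
            problems.append(f"{r.name}: no KPIs")
        if r.is_agent:
            # An agent's manager must exist and must be a human, not another agent.
            manager = by_name.get(r.reports_to) if r.reports_to else None
            if manager is None or manager.is_agent:
                problems.append(f"{r.name}: must report to a human")
    return problems


if __name__ == "__main__":
    cmo = RoleCard("CMO", is_agent=False, purpose="Grow qualified pipeline",
                   targets={"pipeline_growth_pct": 20.0})
    drafter = RoleCard("campaign-drafter", is_agent=True,
                       purpose="Draft campaign copy to the brand brief",
                       decision_rights=["propose drafts"],
                       targets={"drafts_per_week": 10.0}, reports_to="CMO")
    analyst = RoleCard("performance-analyst", is_agent=True, purpose="",
                       reports_to="campaign-drafter")
    for problem in role_gaps([cmo, drafter, analyst]):
        print(problem)
```

Here the analyst agent is flagged three times: no purpose, no KPIs, and a manager who is itself an agent. Using one `RoleCard` type for both humans and agents is a deliberate choice that mirrors the claim that the same alignment principles apply to both.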

Here’s the even better news: the principles required for human organizational alignment are the same ones required for human-AI alignment.

As a transformation leader, here are the essential alignment levers from Organizational Physics. Notice how each applies equally to managing people and their agents:

Strategy: Define your organization’s longer-range goals and shorter-range tactics for its current stage of development. What’s in, what’s out, what makes you unique and valuable in this evolving market—now and over time.

Culture: What are the key events and people in your organization’s history? What was its founding vision? What are the shared vision and values today—and do they enable high-performance collective action?

Structure: Structure gives shape to the organization. What are the main functions this organization must energize to execute strategy? A breakdown in structure is like a musculoskeletal breakdown in the human body—no amount of effort will drive high performance when alignment is off. Structure should define roles, governance protocols, desired orientation, and communication needs and style for both humans and their agents.

Process: Process is how energy and information flow through structure. A breakdown in process is like disrupted blood flow or neural activity in a human body: the system cannot survive and thrive without addressing it. One often-overlooked element is the process of generating and cascading a single source of truth throughout the enterprise. If a system can’t sense and respond to its environment, it’s already dead. This is why companies with a real data backbone gain speed with AI, while those without an accurate, trusted data layer stall.

People & Agents: People, and now agents, bring energy to the system. They perform roles within the structure and bring life to processes in ways that—ideally, by design—support the culture to achieve the strategy.

When done well, these five levers create persistent context for both humans and their agents to execute on the mission. If any lever is missing or misaligned, neither humans nor agents will thrive—and the organization will suffer. Organizational intelligence requires alignment of these core elements to function, just as a human being needs internal coherence to sense and respond intelligently. The only difference is scale.

The CEO’s Takeaway

The pace of AI innovation is fast—probably faster than your current organizational design can digest effectively.

The important question right now, however, isn’t “How will we coordinate multi-agent systems across the enterprise?”

It’s “How will we create and sustain an aligned, intelligent organization that harnesses talented humans empowered to do increasingly useful work with their suite of AI agents?”

Why is this the more important question? Because once AI moves from isolated tools into the bloodstream of daily operations, your company becomes the operating system. Every structural flaw, every ambiguous role, every slow decision loop gets amplified.

In this fast-approaching reality, competitive advantage won’t come from who uses the biggest models or deploys the most agents.

It will come from who builds the most aligned system—an organization designed for superhumans (talented, self-motivated, AI-fluent people) moving in one direction with minimal wasted energy.

That’s the physics of it.

📌 P.S. Technology keeps raising the ceiling. Organizational alignment decides how far you can actually go.