Summary Insight:

AI won’t fix chaos—it reveals it. Your structure, not your stack, decides whether agents scale execution or get thrown out.

Key Takeaways:

- Shared context is the bottleneck for both humans and AI agents.

- Structure is what lets AI agents succeed without losing alignment.

- Most companies will blame AI for the failure, when structure was the real problem all along.

This week, a public debate unfolded in the AI world between two heavyweights: Cognition, creators of the Devin software development agent, and Anthropic, the company behind Claude. The clash? Architecture. Cognition argues, “Don’t Build Multi-Agents.” Anthropic counters, “How We Built Our Multi-Agent Research System.” Both posts are worth your time.

Strip away the tech jargon, and they’re echoing the same truth from different angles. It’s hard to create and maintain shared context at scale. This is as true for AI agents as it is for the human organizations in which they operate.

The Context-Sharing Tax

Consider a one-person startup. The founder juggles everything, and there’s zero context-sharing tax. But scale that startup, and you need specialized talent, or agents. The cost of aligning them skyrockets. The bigger the task, the bigger the organization required. The bigger the organization, the heavier the tax.

Structure is your answer. Always has been.

At its essence, structure is how your business channels energy to execute its strategy. As strategy shifts, structure must evolve, balancing critical tensions: pushing authority to the work’s edge without losing alignment, prioritizing effectiveness (doing the right things) over mere efficiency (doing things right), and safeguarding long-term growth against short-term demands. A robust structure harnesses these forces into momentum. A flawed one chokes execution and amplifies complexity.

People, Processes, and AI Agents

Structure comes alive through people and processes. In high-change, complex environments, processes must maintain shared context across the organization. Context isn’t context unless it’s understood and aligned. Creating persistent context for AI agents mirrors this: it’s as critical as aligning human teams.

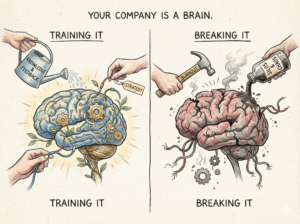

Picture two companies. The first invests in structure and processes, building shared context. Everyone knows the “what,” “why,” and “how”—their role in the flow. The second chases growth but lacks an aligned operating model. Noise, friction, and entropy dominate.

Now, add AI agents. In the first company, agents supercharge execution, amplifying clarity. In the second, they magnify the mess. Misalignment worsens. Leaders blame the tech, but the root failure is structural. I predict that most companies will ditch their AI agents by 2025 or 2026, claiming “it didn’t work.” The truth? Their structures were broken, and AI just exposed the cracks.

The Lesson: AI Exposes, Structure Wins

AI won’t fix chaos. It will reveal it. If you’re scaling with AI, multi-agent systems are inevitable (score that point for Anthropic). The real question: Is your structure ready to deploy and manage them? This isn’t just a tech challenge; it’s a human systems challenge. Start there. Upgrade your structure, embed shared context, and refine processes. Without these, AI agents will amplify disorder. With them, you build an execution machine.